Top Foundations and Trends in Machine Learning

November 28, 2024

Machine learning is currently redefining industries and changing the way we interact with data. Many businesses have recognized this and are using machine learning capabilities to boost efficiency and drive innovation. To stay competitive in this industry, you need to understand both the foundational principles and the emerging trends.

If you’re looking to catch up on the current trends in machine learning, you’re in luck.

The field is buzzing with so many advancements and machine learning trends as businesses strive to make their processes more efficient and effective.

This article itemizes the key trends in machine learning while also exploring key concepts like supervised, unsupervised, and semi-supervised learning.

Without further ado, let’s explore 7 top foundations and trends in machine learning.

What is Machine Learning?

Machine learning is a subset of artificial intelligence concerned with enabling computers to learn from data so that they can improve their performance over time without being explicitly programmed for every task. Although it sounds futuristic, machine learning is really about teaching machines to recognize patterns independently and make decisions based on the information they receive.

Wondering how it works?

The process starts with collecting relevant data from different sources. This data becomes the foundation for training the model. During training, the model identifies trends and patterns in the data, which it later uses to make decisions when it encounters real-world data.

Machine learning models can be trained via three major approaches. These are:

- Supervised Learning: This method involves training machine learning models by inputting labeled datasets. A labeled dataset is one where the input data is already paired with the correct output.

For example, imagine training a model on images of cats and dogs that have already been labeled as “cat” or “dog.” The model learns from those labels so that it can identify new cat or dog images it sees later.

- Unsupervised Learning: This method involves training machine learning models on unlabeled datasets. An unlabeled dataset is one where the input data isn’t paired with any correct output. It is left to the system to identify recurring patterns and group the data points according to those patterns.

For example, unsupervised learning might cluster customers based on purchasing behavior without any prior labels.

- Semi-supervised Learning: This method trains the machine learning model with both labeled and unlabeled data. It does this by using a small amount of labeled data to help guide the learning process on a larger set of unlabeled data.

This approach comes in handy when labeling data is expensive or time-consuming.
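To make the difference between these approaches concrete, here is a minimal sketch in plain Python. It assumes a toy nearest-centroid classifier and made-up animal measurements (weight and ear length), none of which come from a real dataset. The supervised step learns only from labeled examples, while the semi-supervised step uses that first model to pseudo-label unlabeled examples and then refits on everything.

```python
from statistics import mean

def centroid(points):
    """Average each coordinate across a list of equal-length feature tuples."""
    return tuple(mean(axis) for axis in zip(*points))

def fit_centroids(labeled):
    """Supervised step: one centroid per label from (features, label) pairs."""
    by_label = {}
    for features, label in labeled:
        by_label.setdefault(label, []).append(features)
    return {label: centroid(points) for label, points in by_label.items()}

def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(c):
        return sum((a - b) ** 2 for a, b in zip(features, c))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

# Hypothetical features: (weight_kg, ear_length_cm). Values are illustrative.
labeled = [((4.0, 6.0), "cat"), ((5.0, 7.0), "cat"),
           ((20.0, 12.0), "dog"), ((30.0, 14.0), "dog")]
unlabeled = [(4.5, 6.5), (25.0, 13.0), (22.0, 11.0)]

# Supervised learning: train on labeled data only.
model = fit_centroids(labeled)

# Semi-supervised learning (self-training): pseudo-label the unlabeled
# points with the first model, then refit on labeled + pseudo-labeled data.
pseudo_labeled = [(x, predict(model, x)) for x in unlabeled]
model_semi = fit_centroids(labeled + pseudo_labeled)

print(predict(model, (6.0, 7.5)))        # label for a new, unseen animal
print(predict(model_semi, (28.0, 13.0)))
```

Self-training is only one of several semi-supervised strategies; it is used here because it fits in a few lines.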

Using any of these approaches, machine learning can help different industries grow faster. In fact, its ability to help businesses make data-driven decisions is one of its biggest attractions.

At the forefront of these advancements is Debut Infotech, a leading machine learning development company offering businesses the opportunity to hire artificial intelligence developers. With a team of skilled experts, we help enterprises leverage AI-powered technologies to create robust, scalable, and efficient solutions tailored to their unique needs.

Nonetheless, as this field grows, many industry players are continuously searching for ways to amplify their efforts further. This has led to some emerging trends that we’ll discuss in the next section.

7 Top Trends in Machine Learning

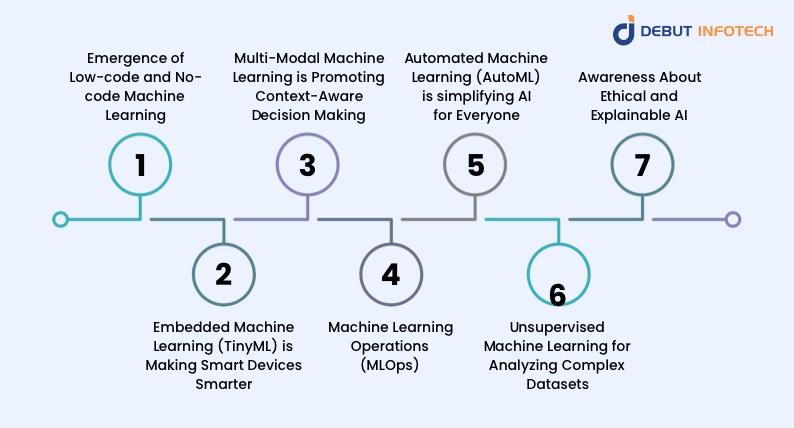

The following are the 7 top trends the field of machine learning is currently experiencing across several industries.

1. Emergence of Low-code and No-code Machine Learning

Low-code and no-code machine learning platforms are making it easier for people to develop AI applications regardless of their technical expertise. These platforms have a graphical user interface (GUI) that contains pre-built components such as data preprocessing tools, algorithms, and model evaluation metrics.

So, even if users don’t have any technical machine learning knowledge, they can drag and drop these components into a workflow, and voilà! They can create machine learning models without writing any code.

Popular examples of these low-code or no-code solutions include Amazon SageMaker, Apple Create ML, Google AutoML, and Google Teachable Machine. By reducing the need for traditional coding expertise, these solutions can significantly reduce development costs, which is a big part of why they are becoming increasingly popular.

However, although these solutions can handle simpler machine learning projects like customer retention analysis and dynamic pricing, they may struggle with complex projects that require more customization and scalability.

Wondering why?

Advanced machine learning projects involve in-depth data analysis and model optimization, which usually call for skilled machine learning engineers. Nonetheless, low-code and no-code platforms are still very useful for many projects right now, and as research intensifies, their capabilities are likely to increase in the coming years.

2. Embedded Machine Learning (TinyML) is Making Smart Devices Smarter

TinyML or Tiny Machine Learning is a type of Machine Learning that is concerned with the deployment of machine learning models on small, low-power devices, known as microcontrollers. This subset of machine learning is at the intersection of Internet of Things (IoT) and machine learning.

So, what does TinyML do?

It increases the efficiency and responsiveness of traditional IoT systems by embedding AI capabilities directly into them. Most IoT systems comprising microcontrollers and low-power chips often rely on sending their information to a centralized server for processing before they can make smart decisions.

However, with TinyML, the machine learning algorithm is already embedded in the IoT system or microcontrollers. As a result, the data analysis occurs on the device itself, thus reducing latency or delays. More importantly, with TinyML, IoT systems can process information faster and make quicker real-time decisions.

So, how is this beneficial?

TinyML can benefit IoT systems in various industries, such as healthcare management or industrial system maintenance, where immediate responses are vital.
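As a rough illustration of on-device decision-making, the sketch below evaluates a tiny logistic model locally instead of sending each reading to a server. Python stands in here for the firmware language a real microcontroller would use, and the weights, sensor names, and threshold are all hypothetical values chosen for the example rather than a trained model.

```python
import math

# Hypothetical parameters of a tiny logistic model. On a real device these
# would come from a model trained elsewhere and stored in the firmware.
WEIGHTS = (0.8, 1.5)      # (temperature deviation, vibration level)
BIAS = -4.0
ALERT_THRESHOLD = 0.5     # assumed cut-off for raising a local alert

def anomaly_probability(temp_deviation, vibration):
    """Score a single sensor reading with the embedded model."""
    z = WEIGHTS[0] * temp_deviation + WEIGHTS[1] * vibration + BIAS
    return 1.0 / (1.0 + math.exp(-z))

def decide_on_device(temp_deviation, vibration):
    """Return True when the reading should trigger an immediate local alert.

    No network round trip is involved: the decision uses only the
    parameters stored on the device.
    """
    return anomaly_probability(temp_deviation, vibration) >= ALERT_THRESHOLD

print(decide_on_device(0.5, 0.5))   # a calm reading
print(decide_on_device(3.0, 2.0))   # a hot, strongly vibrating machine
```

The point of the sketch is the control flow: the reading is scored and acted on where it is produced, so the response time does not depend on a connection to a central server.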

Despite all these potential benefits, certain challenges, like the skills required to build these systems, still slow the adoption of TinyML. To create an effective TinyML solution, you need a blend of expertise in machine learning and embedded systems, which is a hard combination to come by.

Nonetheless, regardless of these challenges, TinyML presents immense opportunities, and many people in the machine learning ecosystem are already on to it.

3. Multimodal Machine Learning is Promoting Context-Aware Decision Making

The whole point of artificial intelligence systems is to learn from data so they can make decisions and perform specific tasks. Most traditional AI systems do this by using data from a single source. In terms of human perception, that’s like making decisions while relying on only one sense, such as sight.

But human perception doesn’t work like that, does it?

Human perception uses sight, sound, touch, and other data sources. That’s exactly what AI systems are now trying to mimic with multimodal machine learning. As the name implies, multimodal machine learning is a major improvement in AI systems characterized by the ability to process and integrate information from multiple sources at the same time. This involves the combination of different data types, such as text, images, audio, and video.

So, why is it important for AI systems to integrate data from multiple sources?

The simple answer to this question is context awareness in decision-making. Let’s compare two AI systems: One integrates data from a single source, say, auditory data, while the other analyzes both visual and auditory data. If both these systems were built to identify objects, which do you think will perform the task with more accuracy?

Clearly, that’ll be the multimodal example. That’s why we’re now noticing an increase in the application of multimodal machine learning across various sectors where complex analysis is important.
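One simple way to combine sources is late fusion, where each modality produces its own class probabilities and the system averages them. The sketch below assumes two hypothetical per-modality outputs (the class names and numbers are invented for illustration) and shows how a second source can overturn an ambiguous single-source guess. It is not a claim about how any particular production system fuses its inputs.

```python
def fuse(per_modality_probs, weights=None):
    """Weighted average of class-probability dicts, one dict per modality."""
    n = len(per_modality_probs)
    weights = weights or [1.0 / n] * n
    classes = per_modality_probs[0].keys()
    return {
        c: sum(w * probs[c] for w, probs in zip(weights, per_modality_probs))
        for c in classes
    }

def top_class(probs):
    """Return the class with the highest probability."""
    return max(probs, key=probs.get)

# Hypothetical model outputs for the same scene.
audio = {"ambulance": 0.55, "delivery_van": 0.45}    # sound alone is ambiguous
vision = {"ambulance": 0.10, "delivery_van": 0.90}   # the image is clearer

fused = fuse([audio, vision])

print(top_class(audio))   # decision from audio only
print(top_class(fused))   # decision after combining audio and vision
```

Equal weights are an assumption; a real system might weight modalities by how reliable each one is, or learn the fusion jointly.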

For instance, autonomous vehicles benefit from context-aware decision-making because they need to interpret sensor data from cameras and microphones to drive safely. Another application can be found in healthcare settings, where patient records need to be integrated with medical imaging and genomic data to make more accurate diagnoses.

Multimodal machine learning is attracting a lot of attention right now, and many organizations have recognized that it can enhance user experience and operational efficiency.

4. Machine Learning Operations (MLOps)

Do you know how DevOps combines different practices and tools to help organizations deliver applications and services faster than traditional software development? Something similar is now being applied when developing and launching machine learning models. MLOps combines machine learning with DevOps principles to make machine learning model deployment easier, faster, and more scalable.

Just like DevOps helps developers and operations collaborate better to improve software development, MLOps connects data scientists, IT, and the operations team to ensure that ML models are not just created but effectively integrated into business processes.

Here’s how it works:

First, data scientists build a machine learning model tailored to a specific business objective. Next, they acquire data and use it to train the model. After the model has been trained and validated for accuracy, it is deployed into a production environment, where it can start making predictions based on real-world data. This is where MLOps adds the most value because it ensures continuous monitoring and improvement.

MLOps systems track the models in real time to detect any issues that arise. So, if the model’s accuracy drops, MLOps triggers the established protocols to quickly retrain and adjust the model without disrupting the entire business process. As a result of this proactive approach, machine learning models become more reliable and cost-effective because businesses avoid the costs associated with downtime.
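The monitor-then-retrain loop can be sketched as a rolling accuracy check. The class name, window size, and threshold below are assumptions made for this example; real MLOps platforms provide their own monitoring and alerting, which this sketch does not try to reproduce.

```python
from collections import deque

class AccuracyMonitor:
    """Track accuracy over the most recent predictions (hypothetical helper)."""

    def __init__(self, window=5, threshold=0.8):
        self.outcomes = deque(maxlen=window)   # True for each correct prediction
        self.threshold = threshold

    def record(self, prediction, actual):
        """Store whether one production prediction matched the real outcome."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Rolling accuracy, or None before any outcome has been recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        """Signal retraining once the window is full and accuracy is too low."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.threshold

monitor = AccuracyMonitor(window=5, threshold=0.8)

# Five correct predictions: the model looks healthy.
for predicted, actual in [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0)]:
    monitor.record(predicted, actual)
healthy = monitor.needs_retraining()

# Two misses push the rolling accuracy below the threshold.
for predicted, actual in [(1, 0), (0, 1)]:
    monitor.record(predicted, actual)
degraded = monitor.needs_retraining()

print(healthy, degraded, monitor.accuracy())
```

In practice the retraining signal would start an automated pipeline rather than just return a flag, and ground-truth labels often arrive with a delay, which this sketch ignores.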

This adoption of MLOps practices is one of the fastest-rising trends in Machine Learning recently because organizations now, more than ever, want to scale AI capabilities in their businesses. And that’s exactly what MLOps helps them do by standardizing workflows and automating repetitive tasks in the Machine Learning project lifecycle.

5. Automated Machine Learning (AutoML) is Simplifying AI for Everyone

Earlier, we highlighted the rise of low-code and no-code solutions in helping individuals with little or no machine-learning expertise to create models. Automated Machine Learning (AutoML) is another emerging technology that serves a similar purpose by providing methods and processes that make machine learning available to non-machine learning experts.

Building and deploying ML models involves many complex processes, including data preprocessing, feature selection, model training, and evaluation. Normally, data scientists perform these tasks every time they build a new model, and the tasks require a significant amount of time and focus. However, with AutoML, most of these processes can be automated, significantly speeding up the development cycle.

Data labeling is a good example of a task that data scientists spend a lot of time on when building an ML model. It is known to be labor-intensive and error-prone. AutoML tools can reduce this burden by automating parts of the process.
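One piece of this automation, choosing a model configuration without manual trial and error, can be sketched in a few lines. The example below assumes a toy one-dimensional k-nearest-neighbors classifier and invented data, and it simply tries each candidate value of k and keeps the one with the best validation accuracy. Commercial AutoML products search far larger spaces; this only illustrates the idea.

```python
from collections import Counter

def knn_predict(train, x, k):
    """Majority label among the k training points closest to x (1-D features)."""
    nearest = sorted(train, key=lambda pair: abs(pair[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def validation_accuracy(train, validation, k):
    """Fraction of validation examples that k-NN labels correctly."""
    hits = sum(knn_predict(train, x, k) == y for x, y in validation)
    return hits / len(validation)

def auto_select_k(train, validation, candidates):
    """Try every candidate k and return (best_k, best_accuracy)."""
    scores = {k: validation_accuracy(train, validation, k) for k in candidates}
    best_k = max(scores, key=scores.get)
    return best_k, scores[best_k]

# Invented data; (2.5, "high") is a deliberately noisy training point.
train = [(1.0, "low"), (2.0, "low"), (3.0, "low"),
         (8.0, "high"), (9.0, "high"), (10.0, "high"), (2.5, "high")]
validation = [(1.5, "low"), (2.4, "low"), (9.5, "high"), (8.5, "high")]

best_k, best_accuracy = auto_select_k(train, validation, candidates=[1, 3, 7])
print(best_k, best_accuracy)
```

Here k=1 is misled by the noisy point and k=7 always predicts the majority label, so the search settles on k=3 without anyone tuning it by hand.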

Many businesses recognize these potential benefits and want to be more efficient. This is reflected in the AutoML market size, which was valued at 1.4 billion USD in 2023 and is expected to grow at a compound annual growth rate (CAGR) of 30% between 2024 and 2032. Popular products that businesses use to integrate machine learning into their operations include Google Cloud AutoML and H2O.ai.

Currently, there’s no doubt that AutoML boosts the productivity of these machine-learning operations while reducing their operational costs. However, it also presents certain challenges, such as ensuring model accuracy and addressing potential biases in automated processes. Regardless of these challenges, AutoML offers so many positives, and it is here to stay.

6. Unsupervised Machine Learning for Analyzing Complex Datasets

Here’s some perspective:

The ultimate goal of most machine learning models or solutions is to operate effectively without human intervention. In recent times, organizations with vast amounts of unstructured data have been trying to achieve this goal by using unsupervised machine learning.

What is unsupervised machine learning?

Unsupervised machine learning is an artificial intelligence approach that allows machines to analyze and interpret large datasets without needing any labels or human guidance. It contrasts with supervised learning, which involves telling the machine learning model what to look for in a dataset. Unsupervised learning instead focuses on unlabeled datasets, allowing systems to identify patterns and group similar data points on their own based on shared characteristics.

So, how does this work?

Unsupervised learning often uses a popular technique known as clustering. Clustering algorithms group data points that exhibit similar features, enabling the model to uncover hidden structures and patterns within the data.

This technology can be very beneficial in many applications across different industries. For example, in retail settings, unsupervised learning can be used to analyze customer purchasing behavior and group customers into different segments based on their shopping habits. Based on this segmentation, businesses can now tailor their marketing strategies to improve customer engagement.
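Customer segmentation of this kind can be sketched with k-means, one widely used clustering algorithm. The customer figures below are invented, and the starting centroids are passed in explicitly so the result is reproducible; a production system would more likely rely on a library implementation with a smarter initialization.

```python
def sq_dist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def mean_point(points):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))

def kmeans(points, initial_centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then update."""
    centroids = list(initial_centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda i: sq_dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [mean_point(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical customers: (orders_per_month, average_basket_usd). No labels.
customers = [(1, 20), (2, 25), (1, 30), (10, 200), (12, 220), (11, 210)]

centroids, clusters = kmeans(customers, initial_centroids=[customers[0], customers[3]])

for center, members in zip(centroids, clusters):
    print(center, members)
```

The algorithm is never told which customers are occasional shoppers and which are frequent big spenders; the two segments emerge from the purchasing data alone.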

That’s not all.

Unsupervised learning can also improve decision-making by automating data analysis. As machines autonomously draw conclusions from complex datasets, they can quickly identify trends and anomalies that a human analyst might miss.

7. Awareness About Ethical and Explainable AI

As AI adoption increases, many businesses are no longer concerned only with whether AI systems perform their tasks effectively. They also want to ensure that these systems are fair, transparent, and accountable.

Ethics in AI is all about eliminating biases from algorithms so that AI systems do not discriminate against certain individuals or groups while aligning with human values. This involves addressing issues like data privacy, security, and the social implications of deploying AI solutions. For example, companies must think about how their algorithms might impact different demographic groups so that they don’t reinforce any existing inequalities.
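One simple check on how a model affects different demographic groups is to compare how often each group receives a positive prediction. The sketch below computes that gap (often called the demographic parity difference) on invented predictions for two hypothetical groups. It is only one of many possible fairness checks, and a small gap on this metric does not by itself show that a system is fair.

```python
def positive_rate_by_group(predictions, groups):
    """Share of positive predictions (1 = approved) within each group."""
    totals, positives = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred else 0)
    return {g: positives[g] / totals[g] for g in totals}

def parity_gap(rates):
    """Difference between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())

# Invented model outputs for eight applicants in two hypothetical groups.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = positive_rate_by_group(predictions, groups)
gap = parity_gap(rates)

print(rates, gap)
```

A large gap like this one would be a prompt to investigate the training data and the model, not a verdict on its own.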

On the other hand, explainable AI refers to AI systems that can provide clear and understandable reasons for their decisions. This trend is most prominent in fields like healthcare, finance, and criminal justice, where users need to be able to trust the decisions made by these AI systems. By making algorithms more interpretable, organizations can empower users to understand and contest decisions when necessary.
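For a linear scoring model, one straightforward form of explanation is to report how much each input contributed to the final score. The sketch below assumes a hypothetical credit-style model with made-up weights and feature names. More complex models need dedicated explanation techniques that this example does not cover.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and each feature's contribution, largest impact first."""
    contributions = {name: weights[name] * value
                     for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(),
                    key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical model weights and one applicant; all values are illustrative.
weights = {"income_k": 0.02, "missed_payments": -0.5, "years_as_customer": 0.1}
applicant = {"income_k": 50, "missed_payments": 3, "years_as_customer": 2}

score, ranked = explain_linear_score(weights, bias=0.0, features=applicant)

print(round(score, 2))
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

An output like this lets a user see that missed payments outweighed income in the decision, which is the kind of reason they would need in order to contest it.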

Microsoft’s Responsible AI Standard is one of the most common frameworks for guiding this practice, as it promotes relevant principles like inclusiveness, reliability, and accountability. The key point here is that both businesses and individuals in machine learning are more conscious of ethics when deploying solutions. They now take the social impact and performance of their AI solutions into account when building them or assessing their effectiveness, and that’s a good thing!

Conclusion

Machine Learning is experiencing constant advancements meant to help ML models become better, more effective, and more ethical.

While low-code, no-code, and AutoML solutions make it easier for non-experts to build machine learning applications, multimodal machine learning is promoting better results and efficiency in ML systems.

Furthermore, embedded machine learning (tinyML) is also making smart devices smarter by incorporating ML models directly into microcontrollers.

Amid these advancements, Debut Infotech, a leading machine learning development company, is at the forefront of innovation. Our expertise helps businesses leverage cutting-edge technologies like AutoML, TinyML, and multi-modal ML systems to create tailored solutions that drive efficiency and success.

With so much happening in the machine learning space, we’re here to help shape the future of intelligent systems. It’s going to be an interesting future for AI, and we’re ready to lead the way.

Frequently Asked Questions

Foundation models in machine learning refer to deep learning neural networks that have been trained on large datasets. Popular examples include Amazon Titan, GPT, AI21 Jurassic, Claude, and BERT.

Yes, GPT-4 is a foundation model.

The four main types of machine learning are supervised learning, unsupervised learning, semi-supervised learning, and reinforcement learning.

The foundations and trends in machine learning in 2024 include key advancements in low and no-code solutions, AutoML, MLOps, widespread use of unsupervised learning, and greater awareness about ethical and explainable AI.

OpenAI is a company rather than a model, but its GPT models are foundation models that are trained on large amounts of data and can be used for multiple purposes.
