The Crucial Role of Data in ML Model Training

April 14, 2025

Machine learning runs on data. To work with it well, you need to understand the types of data used in AI: facts, text, symbols, images, and videos all start out in a raw state, and processing is what turns that raw data into information.

Without data, machine learning is an empty shell, because code alone has neither intelligence nor purpose. Data is what allows machines to accomplish tasks that once seemed beyond imagination.

Although the success of machine learning depends on data, machines lack a built-in ability to comprehend the meanings behind the data they process. They don’t understand why the letter ‘a’ looks the way it does, or why the word ‘this’ carries a particular meaning.

Similarly, most people eat food without truly understanding its nutritional breakdown or how the body processes it; we simply eat because we need to. In the same way, machines “consume” data without comprehension and cannot interpret its context or meaning.

They only identify patterns and correlations inside the data while neglecting to understand its fundamental nature. Data is to machines what fuel is to a car. It powers the system, but the car has no idea what fuel is or where it comes from.

At the end of the day, all machines really do is look for connections between pieces of data. This process is driven by the machine learning model, which deciphers correlations but never grasps context.

In this article, we’ll explore why data is so important in machine learning and why machines don’t actually understand the data itself but instead focus on identifying relationships within it.

Without further ado, let’s get started!

What You Should Know About Data in ML Model Training

Machine learning (ML) and artificial intelligence (AI) perform best at two things: finding patterns in data and making data-driven decisions. To do either, a model first needs a baseline picture of what normal data looks like. Training data provides that baseline, giving the model a reference against which to interpret new information.

Spam email detection is a practical example. Unlike a person, who can often spot spam by instinct, an ML model has to be trained to recognize it. The training data consists of many labeled emails, both spam and legitimate. Each email has features such as word choice, formatting, sender information, and the presence of links or attachments.
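To make the idea of email features concrete, here is a minimal sketch of how one raw email might be turned into a few features before training. The function name, the feature names, and the `PROMO_WORDS` list are all assumptions made for illustration; a real spam filter would use a far richer feature set derived from its labeled data.

```python
import re

# Hypothetical promotional terms; a real system would learn these from labeled data.
PROMO_WORDS = {"free", "winner", "offer", "urgent"}

def extract_features(subject: str, body: str, sender: str) -> dict:
    """Turn one raw email into a small dictionary of features."""
    text = f"{subject} {body}"
    words = re.findall(r"[a-z']+", text.lower())
    letters = [c for c in text if c.isalpha()]
    return {
        # Word choice: how many promotional terms appear.
        "promo_word_count": sum(w in PROMO_WORDS for w in words),
        # Formatting: share of letters that are upper case.
        "uppercase_ratio": (
            sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
        ),
        # Links: number of http(s) URLs in the body.
        "link_count": len(re.findall(r"https?://\S+", body)),
        # Sender information: domain part of the address.
        "sender_domain": sender.rsplit("@", 1)[-1].lower(),
    }

example = extract_features(
    subject="URGENT offer",
    body="You are a WINNER! Claim at http://example.test/prize",
    sender="promo@Example.test",
)
```

Each labeled email would pass through a function like this, and the resulting feature values, paired with the “spam” or “not spam” label, are what the model actually learns from.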

Training data needs to be both comprehensive and varied to avoid biases or gaps, which is why machine learning data quality matters so much. For spam detection, this could mean including emails from different industries, with different styles, tones, and structures. The selected data should capture spam characteristics (e.g., overuse of promotional language and suspicious URLs) while retaining enough variety to cover all forms of email communication.

Similarly, in predictive maintenance, an ML model must first analyze historical sensor data to learn how machinery normally performs before it can identify signs of equipment failure. Once trained, the model can flag irregular vibration signals as anomalies and estimate when specific parts will need replacement.
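As a rough illustration of learning what “normal” looks like from historical readings, the sketch below flags new vibration values that sit far from the historical mean. It is a simple statistical baseline, not a trained ML model, and the function name, the sample readings, and the three-standard-deviation threshold are all assumptions.

```python
from statistics import mean, stdev

def find_anomalies(history, new_readings, threshold=3.0):
    """Return readings more than `threshold` standard deviations from the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of the historical data
    return [x for x in new_readings if abs(x - mu) > threshold * sigma]

# Hypothetical vibration amplitudes recorded during normal operation.
history = [1.0, 1.1, 0.9, 1.0, 1.2, 0.8, 1.0, 1.1, 0.9, 1.0]
flagged = find_anomalies(history, [1.05, 0.95, 2.4, 1.1])
print(flagged)  # [2.4]
```

The point is the same as in the paragraph above: nothing can be called irregular until the historical data has established a reference for regular behavior.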

A model that completes training and validation and then passes testing can be deployed in the real world. In the case of spam detection, a trained model can become part of an email service, automatically filtering incoming junk messages and improving user experience and inbox hygiene. This integration highlights the role of Machine Learning in Business Intelligence, where data-driven decisions optimize operational efficiency.

Power Your Models with Data That Delivers Results

Just like a chef needs fresh ingredients, your ML models thrive on quality data. But sourcing, cleaning, and structuring it can be a lot! That’s where we step in. Dive into our data curation and training solutions to see how we turn raw data into your model’s secret sauce.

How to Train a Machine Learning Model

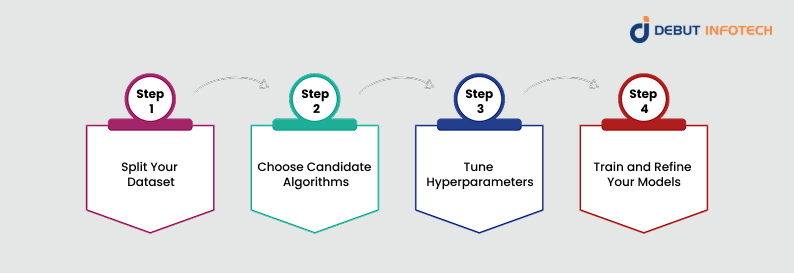

Training a machine learning model involves a structured, repeatable workflow that ensures you get the most value from your data and your data science team’s time. Before jumping into the actual training, it’s important to:

- Clearly define the problem you’re trying to solve.

- Gather and explore your dataset, following best practices for how to collect data for ML to ensure relevance, diversity, and representativeness for your problem domain.

- Clean and preprocess the data to prepare it for modeling.

Afterwards, choose suitable algorithms (considering distinctions like supervised learning vs unsupervised learning), set their hyperparameters, and divide the data into training and testing sets.

Step 1: Split Your Dataset

Your dataset is a limited resource, because the data used to train a model cannot also be used to test it. Testing a model on its own training data gives misleading results: the model may have memorized the training examples rather than learned the underlying correlations.

The standard process splits the data into three sets: training, validation, and test. The model learns from the training set, the validation set is used to tune it, and the test set is held back to estimate real-world performance.
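A three-way split can be sketched in a few lines of plain Python. The 70/15/15 proportions, the fixed seed, and the function name below are assumptions chosen for illustration; the article does not prescribe specific ratios.

```python
import random

def split_dataset(rows, train=0.7, val=0.15, seed=42):
    """Shuffle rows, then cut them into training, validation, and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (
        shuffled[:n_train],                 # used to fit the model
        shuffled[n_train:n_train + n_val],  # used to tune it
        shuffled[n_train + n_val:],         # held back for the final check
    )

train_set, val_set, test_set = split_dataset(range(100))
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
```

Shuffling before cutting matters when the rows arrive in some order, such as by date or by class, because an unshuffled split could leave one set unrepresentative of the others.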

One reliable approach to model validation is k-fold cross-validation. For instance, in 10-fold cross-validation:

- Split the data into 10 equal parts (folds).

- Use 9 folds for training and 1 fold for testing.

- Repeat the process 10 times, each time with a different fold as the test set.

- Calculate the average score across all folds. This gives a solid estimate of your model’s performance.

This approach makes overfitting easier to detect and gives a more reliable evaluation than a single split.
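The fold rotation described above can be sketched as follows. The helper names are assumptions, and `score_fn` stands in for whatever routine trains a model on the training indices and scores it on the test indices; here it is a toy majority-class predictor so the example stays self-contained.

```python
def k_fold_indices(n_samples, k=10):
    """Yield (train_idx, test_idx) pairs; each fold serves as the test set once."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, test_idx
        start += size

def cross_val_score(n_samples, score_fn, k=10):
    """Average the score returned by score_fn over all k folds."""
    scores = [score_fn(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)

# Toy data: every fifth label is 1, the rest are 0.
labels = [1 if i % 5 == 0 else 0 for i in range(100)]

def majority_accuracy(train_idx, test_idx):
    """Predict the most common training label and measure accuracy on the test fold."""
    train_labels = [labels[i] for i in train_idx]
    majority = max(set(train_labels), key=train_labels.count)
    return sum(labels[i] == majority for i in test_idx) / len(test_idx)

average = cross_val_score(len(labels), majority_accuracy, k=10)
```

In practice the data is usually shuffled before the folds are cut, for the same reason as in a single train/test split.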

Step 2: Choose Candidate Algorithms

No single machine learning algorithm suits every problem. Different types of models fit different problems, including:

- Linear Regression or Logistic Regression for simple, interpretable models.

- Random Forests or Gradient Boosting for complex data with lots of features.

- Support Vector Machines for high-dimensional data.

- Neural Networks for unstructured data like images or text.

Selection criteria may include:

- Size and quality of your dataset (e.g., adequacy of training data for machine learning, missing values, or noise).

- Required accuracy versus interpretability.

- Time constraints (some algorithms are slower to train).

- Number of input features.

- Linearity or non-linearity in your data.

Step 3: Tune Hyperparameters

Hyperparameters are settings that guide how a model learns. Unlike model parameters, they are not learned from the training data; they are set before training begins, and they significantly shape how the model performs.

Examples:

- For Lasso Regression, the strength of the regularization penalty must be set in advance.

- In a Random Forest, the number of trees is a hyperparameter, while the tree splits are learned during training.

Hyperparameter tuning can be done manually, through an exhaustive grid search, or with methods such as random search or Bayesian optimization that explore the search space more economically. The choice is itself a common machine learning challenge: balancing performance against computational cost.
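Grid search is the easiest of these to sketch. The example below tries several values of a regularization strength and keeps the one with the lowest validation error. To stay dependency-free it uses a one-feature ridge (L2) penalty as a stand-in for the Lasso penalty mentioned earlier; the data, the candidate values, and the function names are all assumptions.

```python
def fit_ridge_1d(xs, ys, alpha):
    """Closed-form slope for y ~ w * x with an L2 penalty of strength alpha."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + alpha)

def mse(w, xs, ys):
    """Mean squared error of the line y = w * x on the given points."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)

def grid_search(train, val, alphas):
    """Fit once per candidate alpha and score each fit on the validation set."""
    results = {}
    for alpha in alphas:
        w = fit_ridge_1d(*train, alpha)  # alpha is fixed before fitting
        results[alpha] = mse(w, *val)    # w is what training learns
    best = min(results, key=results.get)
    return best, results

# Hypothetical data: training targets are slightly inflated by noise.
train = ([1, 2, 3, 4], [2.3, 4.1, 6.4, 8.0])
val = ([5, 6], [10.1, 11.9])

best_alpha, scores = grid_search(train, val, alphas=[0.0, 0.1, 1.0, 10.0])
print(best_alpha)  # 1.0
```

Note the division of labor: `alpha` is chosen from outside and held fixed during each fit, while the slope `w` is learned from the training data. That is the hyperparameter versus parameter distinction in miniature.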

Step 4: Train and Refine Your Models

With your algorithms chosen and your hyperparameters defined, the next step is to train the models. This involves:

- Splitting the dataset.

- Selecting an algorithm.

- Assigning hyperparameter values.

- Training the model and recording performance.

- Trying different algorithms or tuning hyperparameters to see which combination works best.

The process works like qualifying rounds in athletics: candidate algorithms compete in separate heats, and only the winners advance to the final.

How is Data in ML Model Training Selected for Optimal Results?

1. Data Sources

The saying “garbage in, garbage out” rings especially true in the world of machine learning (ML). The success of an ML model depends heavily on the quality, relevance, and diversity of the data it learns from. Selecting the right training data is one of an AI developer’s main responsibilities during model development.

Training data often isn’t readily available in perfect form. Although precompiled datasets are increasingly common, finding suitable raw data remains a significant challenge. For example, building a model to identify tree species takes substantial effort, because it requires thousands of images taken in different seasons and from different perspectives.

Even when raw data is available, it is unfit for supervised learning until it has been annotated. Annotation tells the model which patterns or features to focus on. Labels must be precise and consistent, because poorly labeled data tends to confuse the algorithm or slow its training.

Some organizations build their own datasets by gathering and tagging data internally, but this can be costly and time-intensive. Alternatively, data can come from public repositories, academic datasets, or crowdsourced platforms, which is why knowing how to collect data for ML effectively matters. No matter where developers obtain their data, whether through internal efforts or partnerships with machine learning development companies, they need to ensure it is representative and suitably structured for the project.

2. Quality

High-quality data underpins model reliability. Poor predictions frequently trace back to common issues such as missing values, incorrect entries, and corrupted files, which is why machine learning models need rigorously prepared training data.

Consider a model that analyzes customer reviews. If many reviews are incomplete, written in different languages without translation, or full of typos, the model’s ability to detect sentiment will suffer.
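A first pass at spotting such issues can be automated before any training starts. The sketch below counts missing values and exact duplicates in a list of review records; the record layout, the field names, and the function name are assumptions, and checks for language or typos would need additional tooling.

```python
def quality_report(records, required_fields):
    """Count missing values per field and exact duplicate records."""
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = 0
    for record in records:
        for field in required_fields:
            value = record.get(field)
            # Treat None and blank strings as missing.
            if value is None or (isinstance(value, str) and not value.strip()):
                missing[field] += 1
        key = tuple(record.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return {"total": len(records), "missing": missing, "duplicates": duplicates}

# Hypothetical customer review records.
reviews = [
    {"text": "Great product", "rating": 5},
    {"text": "", "rating": 4},
    {"text": "Great product", "rating": 5},
    {"text": "Broke after a week", "rating": None},
]
report = quality_report(reviews, ["text", "rating"])
print(report)
# {'total': 4, 'missing': {'text': 1, 'rating': 1}, 'duplicates': 1}
```

A report like this does not fix anything by itself, but it shows where cleaning effort is needed before the data reaches a model.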

3. Diversity and Bias

Diverse datasets reduce bias and improve a model’s ability to generalize. An ML system intended to detect human faces needs training data that covers different ethnic backgrounds, ages, and lighting conditions. A dataset made up predominantly of adult male faces would struggle with other faces, including those of women and children.

Data bias limits model performance and undermines fairness. For instance, a credit scoring model trained primarily on urban financial records might make flawed predictions for rural applicants. Diversity ensures the model doesn’t unfairly favor or overlook certain groups or scenarios.
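One simple way to catch this kind of imbalance early is to measure how much of the dataset each group accounts for. The sketch below flags any group below a minimum share; the 10% cutoff, the group labels, and the function name are assumptions, and an appropriate threshold depends on the problem.

```python
from collections import Counter

def underrepresented_groups(group_labels, min_share=0.1):
    """Return each group whose share of the dataset falls below min_share."""
    counts = Counter(group_labels)
    total = sum(counts.values())
    return {
        group: count / total
        for group, count in counts.items()
        if count / total < min_share
    }

# Hypothetical applicant records, tagged by region.
regions = ["urban"] * 92 + ["rural"] * 8
print(underrepresented_groups(regions))  # {'rural': 0.08}
```

A low share is only a warning sign. Whether it actually harms predictions for that group still has to be checked by evaluating the model on each group separately.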

4. Relevance

The training data should relate directly to the problem the model needs to solve. If you’re building a system to detect phishing emails, using a dataset full of generic email conversations without any actual phishing examples would offer little value. Collaborating with machine learning development services can ensure datasets align with real-world threats and evolving attack patterns.

Relevance also ties into timeliness. An ML model that relies on consumer data from 2010 will not predict well today, because consumer behavior and technology have shifted since then. Up-to-date and context-specific data is vital.

How Data in ML Model Training Powers AI Systems

The fundamental role of training data is to teach AI systems how to detect patterns and make decisions that solve problems. By exposing an ML model to data, developers let the algorithm learn the underlying structures and relationships within it. The trained model can then predict outcomes or classify previously unseen cases.

There are three primary approaches to training ML models:

1. Supervised Learning

In supervised learning, the data used for training is labeled with correct answers. For example, a model learning to identify spam emails requires training on messages that human experts have labeled as either “spam” or “not spam.” Human experts play an essential part in designing and refining these datasets, and their input is needed at multiple stages of training to improve model performance.

2. Unsupervised Learning

Unsupervised learning requires the model to process unlabeled data and discover natural patterns on its own, much like handing a student unsolved puzzles and letting them work out the rules. A common example is machine learning for customer segmentation in marketing, where a model clusters users into groups based on their behavior without being told in advance what those groups should be.
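Clustering can be illustrated with a bare-bones k-means on a single feature. This is a sketch under stated assumptions: the spending figures and starting centroids are made up, and real customer segmentation would use many features and a tested library implementation.

```python
def k_means_1d(values, centroids, iterations=10):
    """Group 1-D values around centroids, refining the centroids each round."""
    centroids = list(centroids)
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        # Assignment step: each value joins its nearest centroid.
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: each centroid moves to the mean of its cluster.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical monthly spend per customer; no labels are provided.
spend = [12, 15, 14, 80, 85, 90, 11, 88]
centers, groups = k_means_1d(spend, centroids=[0, 100])
print(centers)  # [13.0, 85.75]
print(groups)   # [[12, 15, 14, 11], [80, 85, 90, 88]]
```

Nothing told the algorithm that “low spenders” and “high spenders” exist. The two groups emerge from the distances between the values alone.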

3. Semi-Supervised Learning

This method blends the two previous techniques. The model is given a small amount of labeled training data along with a larger set of unlabeled data, helping it learn efficiently with less human input. It’s often used where labeling is expensive or time-consuming, such as medical imaging, where doctors label a few X-rays and the model learns to interpret a larger dataset.
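One common way to combine the two kinds of data is pseudo-labeling: a model fitted on the few labeled examples guesses labels for the unlabeled ones, and those guesses are then folded back into training. The sketch below shows a single round with a nearest-centroid rule on one numeric feature; the feature values, the “normal” and “abnormal” labels, and the function names are all assumptions, and this is only one of several semi-supervised techniques.

```python
def class_centroids(points):
    """Average the feature value of each class in a list of (value, label) pairs."""
    sums, counts = {}, {}
    for value, label in points:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def pseudo_label(labeled, unlabeled):
    """Guess labels for unlabeled values, then refit centroids on everything."""
    centroids = class_centroids(labeled)
    guessed = [
        (v, min(centroids, key=lambda label: abs(v - centroids[label])))
        for v in unlabeled
    ]
    return class_centroids(labeled + guessed), guessed

# Two expert-labeled scans and six unlabeled ones (hypothetical feature values).
labeled = [(1.0, "normal"), (9.0, "abnormal")]
unlabeled = [0.5, 1.5, 2.0, 8.0, 8.5, 10.0]

refined, guessed = pseudo_label(labeled, unlabeled)
print(refined)  # {'normal': 1.25, 'abnormal': 8.875}
```

The risk with this approach is that wrong guesses reinforce themselves, so practical systems usually keep only the pseudo-labels the model is most confident about.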

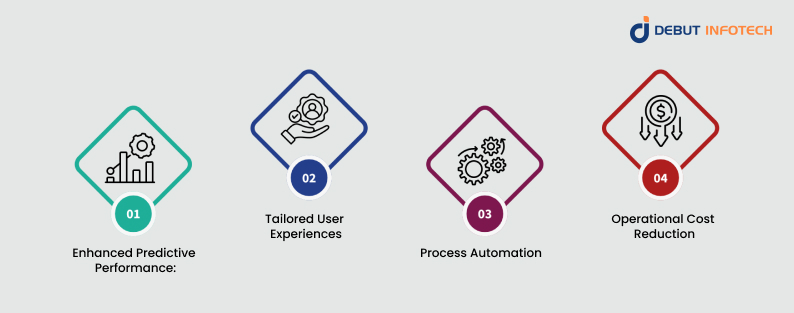

Benefits of Using Data in Machine Learning

- Enhanced Predictive Performance: With access to large datasets, machine learning systems can discover complex patterns that improve prediction accuracy in tasks like predicting customer churn or detecting fraudulent transactions.

- Tailored User Experiences: Machine learning uses data to personalize content, such as recommending movies on streaming platforms or tailoring shopping suggestions based on past behavior, thereby improving user engagement and satisfaction.

- Process Automation: Data-driven machine learning systems take over routine tasks, which improves workflow efficiency. For example, ML-driven chatbots give customers automated support without human involvement. This is a practical application of NLP in Business.

- Operational Cost Reduction: Organizations that use ML for process automation can see major decreases in operational costs. For example, predictive maintenance in manufacturing helps businesses anticipate equipment failure before a breakdown occurs, minimizing repair expenses and reducing facility downtime.

Key Obstacles in Using Data for Machine Learning

- Insufficient Data Volume: Applications such as natural language understanding and image recognition depend on massive datasets. In cases like medical diagnostics for rare diseases, the lack of enough patient data can hinder the development of reliable models.

- Ensuring Data Quality: A primary challenge in machine learning is keeping data accurate, clean, and suitable for its intended use. Missing records, incorrect labels, and outdated information all degrade a model’s predictions. For example, defective financial transaction data leads to faulty fraud detection, a challenge often mitigated by collaborating with machine learning consulting firms to refine data pipelines.

- Model Generalization Issues: A model overfits when it adapts so closely to the training data that it predicts poorly on new data points. A model underfits when it is too simple and overlooks essential trends. For example, a spam filter that overfits may block legitimate emails, while an underfit one might miss actual spam.

- Privacy and Confidentiality Risks: Training models on sensitive datasets, such as healthcare records and location history, raises privacy concerns. For example, a model trained on user behavior might inadvertently reveal sensitive habits or identities if the data is not properly anonymized or secured.

- Bias and Ethical Concerns: When the training data reflects historical or societal biases, models can inherit and even amplify these unfair patterns. For instance, a recruitment algorithm trained on past hiring data may unfairly favor male candidates if previous decisions were biased, leading to systemic discrimination.
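The generalization issue in the list above is usually diagnosed by comparing a model’s score on its training data with its score on held-out validation data. The sketch below encodes that comparison as a rough heuristic; the 0.1 gap, the 0.7 floor, and the function name are assumptions, and sensible thresholds vary by task and metric.

```python
def diagnose_fit(train_score, val_score, max_gap=0.1, min_train=0.7):
    """Rough heuristic: classify a model from its training and validation accuracy."""
    if train_score < min_train:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if train_score - val_score > max_gap:
        # Strong on training data, much weaker on unseen data.
        return "overfitting"
    return "ok"

print(diagnose_fit(0.99, 0.72))  # overfitting
print(diagnose_fit(0.60, 0.58))  # underfitting
print(diagnose_fit(0.90, 0.88))  # ok
```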

Stuck in the Data Wilderness? Let’s Chart a Path Together

Even the best algorithms can’t fix messy data. If you’re wrestling with gaps, biases, or “garbage in, garbage out” anxiety, let’s talk. Our team lives for data puzzles and specializes in integrating solutions across Machine Learning Platforms to help yours unlock real ML magic.

Final Thoughts

Data is the essential foundation of every machine learning model: it determines how the model learns, behaves, and performs in the real world. While advanced algorithms often receive the attention, they cannot outperform the data they are trained on. Building trustworthy artificial intelligence systems depends on the quality, quantity, and ethical handling of that data. As machine learning continues to impact every facet of our lives, a deeper appreciation for the role of data, along with staying updated on machine learning trends, will remain key to realizing its full potential.

Frequently Asked Questions (FAQs)

The training data must include the correct outcome, often referred to as the target or target variable. The machine learning algorithm analyzes the data to uncover patterns that link the input features to this target. Based on these patterns, it generates a model capable of making predictions for new, unseen data.

Data exists in various formats, but machine learning models typically work with four main types: numerical data, categorical data, time series data, and textual data.

A common rule of thumb suggests having a minimum of 10 training examples for each input feature or predictor in your model. So, if your model includes 10 features, you should aim for at least 100 labeled examples to ensure reliable training.
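The arithmetic behind this rule of thumb is a single multiplication, sketched here as a helper whose name is an assumption. Treat the result as a rough lower bound for planning, not a guarantee of reliable training.

```python
def min_training_examples(n_features, per_feature=10):
    """Rule-of-thumb minimum: a fixed number of examples per input feature."""
    return n_features * per_feature

print(min_training_examples(10))  # 100
```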

Machine learning and AI excel in two main areas: identifying patterns in data and making data-driven decisions. To carry out these tasks effectively, models require a reference point. Training data serves as this reference by setting a benchmark that allows models to evaluate and compare new data.

Training data in machine learning consists of labeled or annotated data used to “train” an algorithm or model, allowing it to learn how to perform a particular task and make precise predictions or decisions.
