Scalable AI Agent Architecture: Benefits, Challenges, and Use Cases

January 27, 2025

With their ability to carry out complex tasks independently and improve decision-making, AI agents are transforming the industry.

The global AI agents market is projected to grow from USD 5.1 billion in 2024 to USD 47.1 billion by 2030, a compound annual growth rate (CAGR) of 44.8%. In addition, a recent survey indicates that 51% of organizations already use AI agents in production, with mid-sized companies leading at 63%, and 78% of respondents intend to implement AI agents in the near future. Together, these figures point to a rapidly growing reliance on intelligent systems.

These systems, which are designed to mimic human-like intelligence, span numerous areas, including personalized recommendations and real-time data analysis. AI agent architecture, the structure that defines how these systems operate, is fundamental to their performance.

In this piece, we will define AI agent architecture, its types and components. We will also cover scalable AI agent architecture, why it’s crucial, and popular models and frameworks.

Understanding AI Agents

AI agents are intelligent systems that perceive their environment, decide, and take action to achieve specific goals. They operate independently or with little human input, utilizing technologies such as machine learning and natural language processing. These systems are a core part of modern AI development services, enabling businesses to improve efficiency and decision-making in various fields like customer support, healthcare, finance, and robotics.

Here are some benefits of AI agents:

- Efficiency: Automates repetitive tasks, streamlining workflows and saving time.

- Adaptability: Learns from interactions, adjusting to changing environments and user needs.

- Scalability: Efficiently manages increasing workloads and expanding data requirements without compromising performance.

- Cost-Effectiveness: Reduces operational expenses by optimizing resources and automating processes.

- Enhanced Decision-Making: Analyzes large datasets to deliver accurate insights, supporting informed and strategic decisions.

What Is an AI Agent Architecture?

AI agent architecture is the structured design and interlinking framework that empowers an agent to effectively perceive, process, and produce actions. It integrates numerous components such as sensors, memory, decision-making modules, and actuators. It defines how an agent interacts with its environment, what it learns from past interactions, and how it achieves its goals. All of these ensure that an agent can scale, learn efficiently, and adapt to new environments dynamically.

Key Components of an AI Agent Architecture

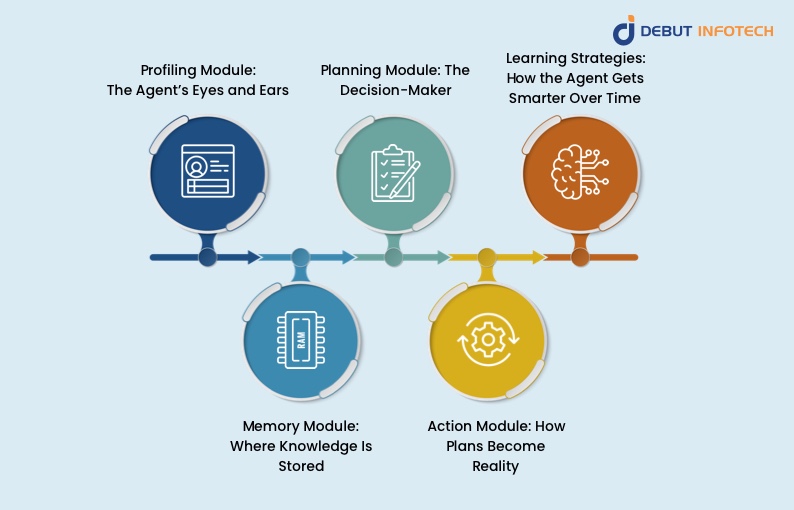

Here are the key components of an agent architecture in AI:

1. Profiling Module: The Agent’s Eyes and Ears

The profiling module allows an AI agent to observe and understand its surroundings. It collects sensory data across different modalities (e.g., cameras, microphones, sensors), enabling the agent to build a dynamic model of its environment. This data is vital for the agent to make sense of events and actions, especially for decision-making purposes.

2. Memory Module: Where Knowledge Is Stored

The memory module stores accumulated knowledge and experiences, enabling the AI agent to make informed decisions. It acts as a long-term database, keeping track of past actions, events, and results. This module allows the agent to recall relevant information when needed and adjust its behavior based on previous outcomes, improving efficiency and adaptability over time.

3. Planning Module: The Decision-Maker

The planning module analyzes the agent’s goals and plans the best course of action to accomplish them. It considers multiple scenarios, weighs possible outcomes, and formulates strategies to respond to challenges. It also takes available resources and constraints into account so that the agent’s actions remain meaningful and aligned with its original goals.

4. Action Module: How Plans Become Reality

The action module executes the plans generated by the planning module. It translates abstract strategies into concrete actions, using effectors like motors or digital interfaces to interact with the environment. This component ensures that the agent’s decisions result in tangible results, enabling it to carry out complex tasks, from navigating a physical space to executing software processes.

5. Learning Strategies: How the Agent Gets Smarter Over Time

Learning strategies help AI agents improve their decision-making skills by adapting based on feedback from interactions and experiences. These techniques usually use machine learning algorithms, like reinforcement learning or supervised learning, enabling agents to improve their actions gradually.

Partnering with firms providing AI consulting services can help organizations implement these advanced learning strategies, ensuring the agent becomes more efficient, accurate, and well-rounded by learning from both its successes and mistakes.
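To make the five components above concrete, here is a minimal Python sketch of how they might fit together in one loop. The `ThermostatAgent` class, its method names, and the temperature scenario are hypothetical and chosen only for illustration. The "learning" step here simply records a reward for each action, which is far simpler than reinforcement or supervised learning.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    """Memory module: stores past (observation, action, reward) steps."""
    episodes: list = field(default_factory=list)

    def remember(self, observation, action, reward):
        self.episodes.append((observation, action, reward))

    def average_reward(self, action):
        rewards = [r for _, a, r in self.episodes if a == action]
        return sum(rewards) / len(rewards) if rewards else 0.0


class ThermostatAgent:
    """Hypothetical agent that keeps a room near a target temperature."""

    def __init__(self, target=21.0):
        self.target = target
        self.memory = Memory()

    def perceive(self, environment):
        # Profiling module: read sensor data from the environment.
        return environment["temperature"]

    def plan(self, temperature):
        # Planning module: choose the action that moves toward the goal.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, action, environment):
        # Action module: apply the chosen action to the environment.
        delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
        environment["temperature"] += delta

    def learn(self, observation, action, environment):
        # Learning step (simplified): score the outcome and store it.
        reward = -abs(environment["temperature"] - self.target)
        self.memory.remember(observation, action, reward)

    def step(self, environment):
        observation = self.perceive(environment)
        action = self.plan(observation)
        self.act(action, environment)
        self.learn(observation, action, environment)
        return action
```

Calling `agent.step(env)` repeatedly on a dictionary such as `{"temperature": 18.0}` drives the temperature toward the target while the memory accumulates feedback that a more advanced planner could use.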

Types of AI Agent Architectures

1. Reactive Architectures

Reactive architectures focus on immediate responses to environmental stimuli without needing internal models or memory. These agents rely on simple rules or behaviors to react to changes in real-time. While effective for specific tasks, reactive agents may struggle with complex, long-term objectives or dynamic environments.

2. Deliberative Architectures

Deliberative architectures are more sophisticated, involving reasoning and planning to make informed decisions. These agents build internal models of their environment, consider various options, and choose the best course of action. They excel in complex environments but may require more computational resources and time for decision-making compared to reactive agents.

3. Hybrid Architecture

The hybrid architecture combines elements from both reactive and deliberative models. It aims to balance quick, reflexive responses with the ability to plan for more intricate tasks. By integrating these approaches, hybrid agents can handle both immediate and long-term challenges effectively, providing greater flexibility and adaptability in dynamic situations. Organizations often choose to hire artificial intelligence developers to create such advanced systems, ensuring optimal performance in diverse and evolving environments.
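As a rough illustration of the hybrid idea, the Python sketch below checks a reactive rule first and falls back to a deliberative planner only when no reflex applies. The grid world, the function names, and the `obstacle_ahead` percept are assumptions made for this example, and breadth-first search stands in for whatever planner a real system would use.

```python
from collections import deque


def reactive_rule(percept):
    """Reactive layer: an immediate rule with no memory or internal model."""
    if percept.get("obstacle_ahead"):
        return "stop"
    return None


def plan_path(start, goal, walls, size):
    """Deliberative layer: breadth-first search over a small square grid."""
    moves = {"up": (0, -1), "down": (0, 1), "left": (-1, 0), "right": (1, 0)}
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (x, y), path = queue.popleft()
        if (x, y) == goal:
            return path
        for name, (dx, dy) in moves.items():
            nxt = (x + dx, y + dy)
            inside = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if inside and nxt not in walls and nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [name]))
    return []  # no route found (or already at the goal)


def hybrid_decide(percept, position, goal, walls, size=3):
    """Hybrid agent: reflex first, planning only when no reflex fires."""
    reflex = reactive_rule(percept)
    if reflex is not None:
        return reflex
    path = plan_path(position, goal, walls, size)
    return path[0] if path else "wait"
```

The reflex is cheap and runs on every step, while the planner is invoked only when the situation allows time for deliberation, which is the balance the hybrid design aims for.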

4. Layered Architectures

Layered architectures divide the agent’s tasks into distinct layers, each responsible for specific functions like perception, decision-making, and action. These layers work independently but are coordinated to ensure the agent responds appropriately. This modular approach allows for easier adjustments and upgrades without disrupting the overall system’s performance.
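One way to picture this modularity is a pipeline of interchangeable layers. In the hypothetical Python sketch below, each layer is a plain function and `LayeredAgent` only coordinates them, so a single layer can be swapped without touching the others. All names and the toy greeting logic are illustrative assumptions.

```python
def perception_layer(raw):
    # Perception: turn raw input into a structured observation.
    return {"text": raw.strip().lower()}


def decision_layer(observation):
    # Decision-making: choose an intent based on the observation.
    intent = "greet" if "hello" in observation["text"] else "fallback"
    return {"intent": intent}


def action_layer(decision):
    # Action: turn the chosen intent into an output.
    responses = {"greet": "Hi there!", "fallback": "Could you rephrase that?"}
    return responses[decision["intent"]]


def formal_action_layer(decision):
    # Drop-in replacement for action_layer with a different tone.
    responses = {"greet": "Good day.", "fallback": "Please clarify your request."}
    return responses[decision["intent"]]


class LayeredAgent:
    """Coordinates independent layers by passing each output to the next."""

    def __init__(self, layers):
        self.layers = list(layers)

    def run(self, raw):
        data = raw
        for layer in self.layers:
            data = layer(data)
        return data
```

Replacing `action_layer` with `formal_action_layer` in the list passed to `LayeredAgent` changes the agent's output style while the perception and decision layers stay untouched.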

5. Cognitive Architecture

Cognitive architecture mimics human-like thought processes and learning mechanisms. These agents focus on problem-solving, memory, attention, and decision-making based on cognitive models. Cognitive architectures aim to create more flexible and adaptable agents capable of handling complex, dynamic environments by simulating human reasoning and intelligence in their design and functioning.

Understanding Scalable AI Agent Architecture and Why It Is Crucial

Scalable AI agent architecture focuses on creating systems capable of adapting to increasing demands without compromising performance. These architectures are essential for meeting the challenges of modern data-intensive environments and diverse application scenarios. Collaborating with an experienced AI agent development company can help ensure the successful design and implementation of these robust systems.

Here are some reasons why scalable AI architecture is important:

1. Handling Large Datasets

Scalable AI architectures are essential for processing large datasets efficiently. As data generation accelerates, AI systems need the capacity to handle and analyze vast amounts of information quickly. Scalable solutions ensure that performance remains optimal, even as the volume and complexity of data continue to grow exponentially.

2. Supporting Diverse Applications

Scalable architectures provide flexibility to adapt to various use cases. Whether an AI system handles simple tasks or complex problem-solving scenarios, a scalable architecture can accommodate both. This adaptability allows organizations to deploy AI solutions across a wide range of industries, from healthcare to logistics, without limitations on functionality.

3. Ensuring Performance

Performance is a key consideration in scalable AI architecture. A scalable system maintains its operational efficiency and output quality, even as demand and workload increase. By distributing resources dynamically, scalable AI systems can ensure that performance remains consistent, minimizing delays and optimizing throughput, thus supporting the demanding nature of real-time applications.
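As a simple illustration of distributing resources dynamically, the Python sketch below sizes a worker pool from the current backlog. The function name, parameters, and limits are hypothetical. Production autoscalers typically use richer signals such as latency or CPU utilization.

```python
import math


def desired_workers(queue_length, per_worker_capacity, min_workers=1, max_workers=20):
    """Return how many workers keep each one at or below its capacity.

    All parameters are illustrative: `queue_length` is the number of pending
    tasks and `per_worker_capacity` is how many tasks one worker should hold.
    """
    if per_worker_capacity <= 0:
        raise ValueError("per_worker_capacity must be positive")
    needed = math.ceil(queue_length / per_worker_capacity)
    # Clamp to the configured bounds so the pool never vanishes or explodes.
    return max(min_workers, min(max_workers, needed))
```

With a capacity of 10 tasks per worker, a backlog of 95 tasks calls for 10 workers, while an empty queue falls back to the configured minimum.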

4. Facilitating Integration

Scalable architectures can readily integrate with new systems and technologies. Because the AI ecosystem is continually changing, adding new tools and platforms should be seamless. A scalable architecture enables these integrations, allowing AI systems to add new functions, improve performance, and adapt to innovative technologies without major redesigns or disruptions.

5. Cost-Effectiveness

Investing in scalable AI solutions helps reduce long-term costs. Scalable architectures reduce the need for frequent system overhauls or replacements as demand grows. By optimizing resources and maintaining performance across varying workloads, scalable systems ensure a more cost-effective approach to meeting future needs, offering better value over time.

Scalable AI Agent Architectures: Popular Models and Frameworks

1. Microservices Architecture for AI Agents

Key Features

a) Decentralization

Microservices architecture breaks down complex AI systems into smaller, independent components. This decentralization enables greater flexibility, faster updates, and the ability to scale individual services as needed.

b) Scalability

Each microservice can scale independently based on demand. This ensures that resource-intensive components can handle high loads while lighter services operate efficiently, improving overall system performance.

c) Technology Diversity

Microservices architecture allows for diverse technologies to be used across different services. This flexibility ensures that the best tools for each specific function can be leveraged, optimizing efficiency.

Benefits for AI Agents

a) Rapid Development

Because microservices are independent, development can be done in parallel, reducing the time needed to deploy new features and updates. Teams can focus on specific services, enhancing productivity.

b) Fault Isolation

Microservices enable the isolation of faults to individual services. If one service fails, others remain unaffected, ensuring the system continues to function while troubleshooting or fixing the issue.

c) Easier Maintenance

With modular services, each can be updated, tested, or replaced without impacting the entire system. This makes maintenance simpler and less risky, promoting long-term sustainability.
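Fault isolation in particular can be sketched in a few lines of Python. In the hypothetical example below, plain functions stand in for services that would normally be reached over the network, and `call_with_fallback` is an assumed helper that contains a failure inside one service so the overall request still completes.

```python
def call_with_fallback(service, payload, fallback):
    """Call one service; on failure return `fallback` plus an error note."""
    try:
        return service(payload), None
    except Exception as exc:
        return fallback, f"{service.__name__} failed: {exc}"


def language_service(text):
    # Healthy stand-in service (hypothetical): always reports English.
    return "en"


def sentiment_service(text):
    # Simulated outage in one service (hypothetical).
    raise TimeoutError("model server unreachable")


def handle_request(text):
    """Query every service independently and collect results and errors."""
    services = {
        "language": (language_service, "unknown"),
        "sentiment": (sentiment_service, "neutral"),
    }
    result, errors = {}, []
    for name, (service, fallback) in services.items():
        value, error = call_with_fallback(service, text, fallback)
        result[name] = value
        if error:
            errors.append(error)
    return result, errors
```

Here the sentiment service fails, yet `handle_request` still returns the language result together with a default sentiment and a recorded error for later troubleshooting.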

Examples of Microservices in AI

a) Natural Language Processing (NLP)

In NLP applications, a microservice dedicated to text analysis can operate independently from other services like user interaction handling. This separation allows for specialized optimization, making the text processing more efficient without affecting other components like response generation or sentiment analysis.

b) Data Processing

Microservices can be used for specific data tasks, such as ingestion, cleaning, and transformation. By isolating these functions, each service can be optimized for its role, enabling efficient data handling and improving the quality of the final dataset used by the AI model.
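The separation of ingestion, cleaning, and transformation can be illustrated with three small Python functions, each standing in for its own service. The `user,amount` record format and the function names are assumptions made for this sketch. In a real deployment each stage would run and scale as a separate process.

```python
def ingest(raw_lines):
    """Ingestion service: parse 'user,amount' lines into raw records."""
    records = []
    for line in raw_lines:
        user, _, amount = line.partition(",")
        records.append({"user": user, "amount": amount})
    return records


def clean(records):
    """Cleaning service: drop rows with no user or a non-numeric amount."""
    cleaned = []
    for record in records:
        user = record["user"].strip()
        try:
            amount = float(record["amount"])
        except ValueError:
            continue  # skip malformed amounts
        if user:
            cleaned.append({"user": user, "amount": amount})
    return cleaned


def transform(records):
    """Transformation service: total amount per user."""
    totals = {}
    for record in records:
        totals[record["user"]] = totals.get(record["user"], 0.0) + record["amount"]
    return totals
```

Chaining them as `transform(clean(ingest(lines)))` yields a per-user summary, and any single stage can be rewritten or optimized without changing the other two as long as the record shape stays the same.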

2. Event-Driven Architecture in AI Systems

Event-driven architecture (EDA) is a design approach centered around producing, detecting, and reacting to events. In this setup, components communicate by triggering events, which then initiate actions or workflows.

Key Features

a) Asynchronous Communication

In an event-driven architecture, components communicate through events asynchronously. This allows systems to process multiple tasks concurrently without waiting for responses, improving overall responsiveness and reducing bottlenecks in AI workflows.

b) Loose Coupling

Services in event-driven architecture are loosely coupled, meaning they can operate independently. This allows for greater flexibility, easier updates, and the ability to add or modify components without disrupting the system’s performance or functionality.

c) Real-Time Processing

EDA is ideal for applications requiring immediate action or responses. It processes events as they occur in real time, making it well-suited for tasks like data analysis, alert generation, and decision-making. This capability is particularly valuable in areas like generative AI development, where real-time processing enhances user experience and system efficiency by enabling dynamic and responsive interactions.
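The three features above can be sketched with a small in-process event bus built on Python's `asyncio`. The `EventBus` class and the `message.received` event name are hypothetical. A production system would more likely use a message broker, but the pattern is the same: the publisher does not know which handlers exist, and the handlers run concurrently.

```python
import asyncio
from collections import defaultdict


class EventBus:
    """Minimal in-process event bus (illustrative, not a real broker)."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_type, handler):
        # Loose coupling: handlers register by event type only.
        self._handlers[event_type].append(handler)

    async def publish(self, event_type, payload):
        # Asynchronous communication: all handlers run concurrently.
        handlers = self._handlers[event_type]
        return await asyncio.gather(*(handler(payload) for handler in handlers))


async def demo():
    bus = EventBus()
    log = []

    async def analyze(payload):
        await asyncio.sleep(0.01)  # simulate slow analysis
        log.append(("analyzed", payload["text"]))

    async def alert(payload):
        log.append(("alerted", payload["text"]))

    bus.subscribe("message.received", analyze)
    bus.subscribe("message.received", alert)
    await bus.publish("message.received", {"text": "server down"})
    return log
```

Running `asyncio.run(demo())` triggers both handlers from a single published event, and the fast alert handler does not have to wait for the slower analysis to finish.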

Benefits for AI Agents

a) Scalability

Event-driven architecture supports scaling because services can operate independently. As demand grows, new services can be added without affecting existing processes, ensuring that the AI system can grow while maintaining optimal performance.

b) Dynamic Workflows

EDA allows for flexible and dynamic workflows, where tasks are initiated by events rather than pre-defined schedules. This adaptability ensures that AI systems can respond to changing conditions or requirements in real time, making them more responsive.

c) Improved User Experience

By responding to events in real time, AI systems can provide immediate feedback, leading to a more interactive and efficient user experience. This is particularly beneficial in applications like chatbots or real-time analytics.

Examples of Event-Driven Applications in AI

a) Chatbots

Chatbots, a form of conversational AI, are often built on event-driven architecture. They process user inputs as events and provide responses in real time. This ensures quick and personalized interaction, improving customer service and engagement.

b) Predictive Maintenance

In predictive maintenance systems, sensors generate events based on equipment performance data. The event-driven architecture enables AI systems to detect potential failures in real time and schedule maintenance proactively, minimizing downtime and reducing operational costs.
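A minimal Python sketch of this flow is shown below. Each sensor reading is treated as an event, and a hypothetical `VibrationMonitor` emits a `maintenance.requested` event once the rolling average crosses a limit. The class name, the threshold, the window size, and the event shape are all assumptions. A real system would typically use a trained model rather than a fixed threshold.

```python
from collections import deque


class VibrationMonitor:
    """Watches one machine and flags it when the rolling mean is too high."""

    def __init__(self, limit, window=3):
        self.limit = limit
        self.readings = deque(maxlen=window)  # keeps only the latest readings

    def on_reading(self, machine_id, value):
        """Handle one sensor event; return a maintenance event or None."""
        self.readings.append(value)
        window_full = len(self.readings) == self.readings.maxlen
        mean = sum(self.readings) / len(self.readings)
        if window_full and mean > self.limit:
            return {
                "type": "maintenance.requested",
                "machine": machine_id,
                "mean": round(mean, 2),
            }
        return None
```

Feeding readings one at a time into `on_reading` means the alert is raised as soon as the trend appears, rather than waiting for a scheduled inspection.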

3. Lambda Architecture for Real-Time AI Processing

Lambda Architecture merges batch and real-time processing to address massive data handling.

Components of Lambda Architecture

- Batch Layer: Manages master datasets and processes large-scale data batches for comprehensive insights. Commonly utilizes Hadoop or Spark.

- Speed Layer: Focuses on real-time data and leverages tools like Apache Storm or Flink for quick updates and analytics.

- Serving Layer: Merges output from batch and speed layers to present unified, query-ready data views using robust databases like Cassandra.
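The interplay of the three layers can be sketched in plain Python. In this hypothetical example, in-memory counters stand in for the distributed tools named above, and the per-user event count is just an illustrative metric.

```python
from collections import Counter


def batch_view(master_dataset):
    """Batch layer: recompute counts from the complete master dataset."""
    return Counter(event["user"] for event in master_dataset)


def speed_view(recent_events):
    """Speed layer: incremental counts for events not yet in the batch view."""
    view = Counter()
    for event in recent_events:
        view[event["user"]] += 1
    return view


def serve(batch, speed, user):
    """Serving layer: merge both views to answer a query."""
    # Counter returns 0 for unknown users, so no special casing is needed.
    return batch[user] + speed[user]
```

A query for one user then combines the periodically rebuilt batch result with whatever has arrived since the last batch run, so answers stay both complete and current.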

Advantages

a) Combines Batch and Real-Time Processing

Lambda architecture merges the reliability of batch processing with the agility of real-time analytics, providing a holistic view of data and supporting both long-term and immediate decision-making.

b) Fault Tolerance

The architecture ensures system reliability by maintaining separate layers for batch and real-time processes. Failures in one layer do not impact the functionality of others, enhancing system robustness.

c) Scalability

With its distributed design, Lambda architecture can handle growing data volumes and increasing complexity, making it suitable for applications requiring significant computational resources.

Use Cases

a) Fraud Detection

Lambda architecture analyzes transaction data in real-time while cross-referencing historical patterns, enabling quick identification of fraudulent activities and preventing financial losses.

b) Real-Time Recommendation Systems

E-commerce platforms use this architecture to provide users with tailored recommendations by processing live interactions and combining them with historical preferences for personalized experiences.

c) IoT Monitoring Systems

For IoT applications, Lambda architecture supports real-time monitoring and alert generation by processing sensor data instantaneously while retaining comprehensive records for long-term analysis.

4. Kappa Architecture: Streamlining AI Data Processing

Kappa Architecture provides a streamlined approach to data processing by focusing entirely on stream processing. It eliminates the dual-layer complexity of the Lambda Architecture, making it easier to manage, scale, and adapt to dynamic data requirements. This architecture is particularly suited for applications where real-time insights are essential for decision-making.

Key Features

a) Single Processing Layer

All data flows through a unified stream processing pipeline, simplifying architecture and operations. Without batch layers, it reduces latency, ensuring faster response times and a more efficient data flow for time-sensitive applications.

b) Event Sourcing

Kappa Architecture captures all changes as a sequence of events, preserving the history of modifications. This approach allows for reprocessing data efficiently when algorithms are updated, or new insights are required, ensuring adaptability and accuracy.

c) Real-Time Analytics

It supports continuous querying of incoming data, delivering immediate insights and powering real-time dashboards. This enables businesses to act swiftly on dynamic trends, enhancing operational efficiency and decision-making capabilities.
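Event sourcing and reprocessing can be illustrated with a short Python sketch. The `EventLog` class below is an in-memory stand-in for a durable, replayable stream, and the two spending functions, including the rule that the second version ignores refunds, are hypothetical examples of an algorithm being updated.

```python
class EventLog:
    """Append-only event log (in-memory stand-in for a durable stream)."""

    def __init__(self):
        self._events = []

    def append(self, event):
        self._events.append(event)

    def replay(self, from_offset=0):
        # Re-read the stored history from a given position.
        return iter(self._events[from_offset:])


def total_spend_v1(events):
    """Original processing logic: sum every amount per user."""
    totals = {}
    for event in events:
        totals[event["user"]] = totals.get(event["user"], 0) + event["amount"]
    return totals


def total_spend_v2(events):
    """Updated logic (hypothetical rule): ignore refunds, i.e. negative amounts."""
    totals = {}
    for event in events:
        if event["amount"] > 0:
            totals[event["user"]] = totals.get(event["user"], 0) + event["amount"]
    return totals
```

Because the log keeps every event, switching from `total_spend_v1` to `total_spend_v2` only requires replaying the same stream through the new function, with no separate batch layer involved.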

Advantages

a) Simplified Pipeline

By eliminating separate batch processes, Kappa Architecture reduces the operational complexity and overhead associated with data management. It streamlines system maintenance while ensuring reliability.

b) Reduced Maintenance

The unified architecture minimizes the need to manage multiple layers, lowering maintenance requirements and costs. This simplicity allows teams to focus on enhancing system performance rather than dealing with infrastructural intricacies.

c) Agility

Kappa Architecture supports rapid responses to evolving data scenarios. Its flexibility allows organizations to adapt quickly, ensuring competitive advantages in dynamic industries.

Use Cases

a) Real-Time Social Media Analytics

Social media platforms leverage Kappa Architecture to analyze user activity, trends, and engagement metrics in real time, enhancing user experience and content delivery.

b) Network Security Monitoring

By processing data streams from network activities, it enables the detection of threats and anomalies as they occur, improving response times and reducing risks.

c) Dynamic Pricing Models

Travel and hospitality industries use Kappa Architecture for real-time pricing adjustments. This approach integrates live market data, consumer demand, and availability, ensuring competitive pricing strategies.

Key Challenges in Designing Scalable AI Agents

1. Data Management:

Handling large datasets requires robust storage solutions and efficient data pipelines. Ensuring data quality, relevance, and security becomes increasingly complex as the scale and diversity of information grow.

2. Computational Resources:

Scalable AI agents demand high-performance infrastructure to handle intensive tasks. Striking a balance between system performance, energy consumption, and cost efficiency remains a critical challenge as computational needs increase.

3. Integration with Existing Systems:

Seamless integration with legacy systems can be challenging due to compatibility issues. Ensuring interoperability and minimal disruption during deployment requires careful planning and robust interface designs.

4. Scalability of Learning:

AI agents need mechanisms for continuous learning at scale. Adapting to new data without compromising prior knowledge demands advanced learning frameworks and optimization techniques, which in turn call for experienced AI development teams.

5. Ethical Considerations:

Ensuring fairness, accountability, and transparency becomes more critical as AI agents gain autonomy. Addressing biases in training data and decision-making processes is essential to build trust and credibility.

Conclusion

Scalable AI agent architectures have become key enablers of innovation and efficiency as AI disrupts industries. Organizations can leverage well-defined components, modular architectures, and real-time processing integrated with intelligent systems to suit dynamic needs. By understanding the principles and addressing challenges related to AI agent design, we can help ensure these systems are reliable, ethical, and user-focused while paving the way for advanced solutions that will drive growth and deliver measurable value.

FAQs

Not at all! It’s also about managing more users, tasks, or different functions without slowing down or breaking down. Scalability ensures that AI adapts to growing workloads across the board.

Initially, maybe a bit more. But in the long run, a scalable design saves money since you won’t need expensive overhauls to keep up with demand. It’s an investment that pays off as your system grows.

Absolutely! Even small projects can benefit from scalability. Starting with scalable architecture means you’re ready for future growth without constant redesigns. It’s like future-proofing your project from the start.

Industries like healthcare, finance, and e-commerce rely on scalable AI to process massive data, serve users globally, and adapt to growing demands. Basically, anywhere AI needs to perform without hiccups, scalability plays a big role.

It’s all about designing modular systems, using cloud solutions, and optimizing algorithms. You start small but ensure everything can expand effortlessly. It’s like building with Lego blocks—you can keep adding pieces without toppling the structure.
