Table of Contents

Home / Blog /

The Ultimate Guide to Model Validation in Machine Learning

April 23, 2025

April 23, 2025

Can an AI model truly be effective if you don’t know how well it performs?

That’s where model validation comes in! Model validation stands as the essential core that supports the success of AI and machine learning projects. The evaluation process goes beyond testing model functionality but ensures it delivers solutions that align with operational business needs. The process includes model validation techniques such as assessing prediction accuracy, examining how data sources, tools, and design components influence the model.

ML governance requires model validation as a core practice to maintain transparency, control, and regulatory compliance across the model development cycle. This is especially critical for addressing broader machine learning challenges, such as algorithmic bias or scalability issues, which can derail real-world deployment.

In this article, we’ll explore what model validation really means, its essential role, and the process through which you can create trustworthy AI systems for real deployment.

What is Model Validation?

Model validation stands as a crucial developmental element for all machine learning and AI systems. The validation process ensures both correct model behavior and ability to process and handle new, unseen data.

A thorough validation becomes necessary to determine if the model will operate effectively outside its training dataset boundaries. The validation process enables teams to determine which model works best and optimize parameters and key performance metrics for their particular task.

Furthermore, the process of validation enables developers to detect system flaws at an early stage, which minimizes the risk of problems becoming more serious. Various validation techniques help users compare their models so they can choose the most efficient option. By exposing the model to actual real-world data, the accuracy level becomes apparent through this method.

The model validation process usually requires involvement from a separate team, or independent third party, or specialized machine learning consulting firms to ensure objectivity. The unbiased process enables standards compliance while users develop trust in the model’s reliability through verification.

Struggling to trust your ML model’s performance?

We turn uncertainty into confidence. Our team implements rigorous validation frameworks to measure accuracy, eliminate bias, and ensure your models deliver real-world reliability. No guesswork—just tailored strategies that make your AI work smarter.

Types of Machine Learning Models and Their Validation Needs

1. Supervised Learning Models

Supervised learning models use labeled data to generate predictions as their primary purpose. Common supervised learning models include linear regression, logistic regression, support vector machines (SVMs), decision trees, random forests, and artificial neural networks (ANNs).

The validation approach varies depending on the model type:

- Linear and Logistic Regression: These models need to be tested for overfitting and underfitting to guarantee their ability to perform well with new data.

- Support Vector Machines (SVMs): The validation process divides data into training sets for model training and independent test sets for evaluating performance.

- Decision Trees and Random Forests: The evaluation process for these models requires data separation into training and test sets, just like SVM models.

- Artificial Neural Networks (ANNs): Separate validation sets complement training and test sets as researchers use them for model validation to determine and optimize alternative model configurations during training.

2. Unsupervised Learning Models

Unsupervised learning models discover hidden patterns within datasets through predefined labels, a key contrast in supervised learning vs unsupervised learning methodologies. Common types of unsupervised learning methods include Principal Component Analysis (PCA) for dimensionality reduction, Latent Dirichlet Allocation (LDA) for topic modeling, association rule learning and autoencoders.

The validation approach depends specifically on the targeted use case. For instance, the evaluation of dimensionality reduction methods happens through analysis of explained variance ratios and reconstruction errors. In the case of topic model, assessments frequently rely on both coherence scores together with perplexity measurements to determine model effectiveness.

Association rule learning requires data validation through measures such as support, confidence and lift to authenticate discovered patterns. On the other hand, Autoencoders evaluate their performance using test data reconstruction loss or mean squared error measurements.

3. Hybrid Models

A hybrid model in machine learning combines distinct methods including decision trees and neural networks, for improved predictive accuracy. It employs multiple models to exploit their unique strengths and produce outcomes that surpass the performance of single models.

A thorough machine learning validation process is required to verify the dependability and consistency of hybrid models. The hybrid model’s performance is evaluated during validation using untapped data from independent test sets to determine its accuracy and ability to handle new scenarios.

The success of models in real-world situations depends heavily on validation tests because they reveal whether a model truly learns from data rather than memorizing it (overfitting) or failing to extract meaningful patterns (underfitting).

Furthermore, model validation enables identification of concealed problems from pre-processing steps, which include data leakage as well as bias artifacts. This process enables proper modifications that will strengthen model reliability and fairness.

4. Random Forest Models

Random forest serves as an ensemble learning technique which builds multiple decision trees through training data to enhance prediction accuracy while decreasing prediction variability. It proves essential for model validation because it helps prevent overfitting by enhancing performance when dealing with new observations.

The random forest algorithm builds multiple trees through random features and training data samplings instead of using a single decision tree. For example, in a medical diagnosis application, individual trees predict disease risks through analysis of patient symptoms and historical data. The final prediction occurs by combining predictions across all trees through majority voting for classification and averaging for regression.

This approach produces generalized models that resist data anomalies effectively for real-world usage, such as customer churn prediction and loan default risk assessment, leveraging machine learning in Business Intelligence. As a result, the model delivers reliable results during evaluation using validation methods, including cross-validation and hold-out testing.

5. Support Vector Machines

Support Vector Machines (SVMs) serve as popular machine learning models that excel at validation tasks by creating optimal class separation margins in data sets.

Through the analysis of the optimal hyperplane, SVM models achieve effective classification by clearly separating data points between distinct classes.

Beyond their classification functions, SVMs demonstrate capabilities for spotting outliers, detecting complex relationship patterns, and resolving regression and classification problems. This versatility makes them a powerful and popular choice across various machine learning applications. Understanding the distinction between AI vs Machine Learning further contextualizes how SVMs fit into broader artificial intelligence frameworks while excelling in specialized ML tasks

6. Deep Learning Models

Deep learning techniques function as a robust artificial intelligence subfield which demonstrates capabilities across multiple functions, including:

- Image recognition

- Natural language processing

- Autonomous driving

The successful operation of these models requires validation to meet effectiveness standards. Model validation is required prior to use of the system to ensure the model successfully completes its assigned tasks such as object recognition, data classification and predictive tasks.

The convolutional neural network (CNN) serves as a widely applied deep learning model which operates for image classification applications. Performance validation of the CNN depends on evaluating it with datasets including labeled images to verify its correct visual recognition and categorization abilities.

Another key model is the Recurrent Neural Network (RNN), that serves as a fundamental model which voice assistants employ for understanding spoken commands. The performance validation of RNN involves testing against extensive databases of speech recordings to verify its ability to process sequences effectively.

The field of robotics utilizes reinforcement learning models to manage tasks including warehouse automation. The models undergo validation through controlled testing and simulations to demonstrate their real-time adaptation capabilities for optimal decision making in dynamic scenarios.

7. Clustering Models

The usefulness and accuracy of clustering models depends on appropriate validation for generating meaningful results.

When working with this technique, there are a couple of requirements that must be met, including:

- Evaluating the quality of the generated clusters

- Comparing outcomes across different clustering algorithms

- Analyzing the consistency of clusters across multiple runs

- Testing the model’s ability to scale with larger datasets

It is important to thoroughly examine the machine learning model’s results to verify both data accuracy and result reliability.

8. k-Nearest Neighbors Model

The k-Nearest Neighbors (KNN) algorithm functions as a supervised learning method which serves both classification and regression purposes. It stands out as a preferred choice to validate model performance because of its simplicity and ease of implementation.

The KNN algorithm finds k nearest data points to each sample and gives the sample the most represented label class among its nearest neighbors. This allows the model to function by producing predictions without needing data pre-training during the training process.

The simplicity of KNN, compared to other models, makes it an ideal selection for model validation processes. Its non-parametric nature allows K-Fold cross-validation to function without dependance on feature quantity or input dataset size for effective new prediction data assessment.

9. Bayesian Models

Bayesian models serve as probabilistic frameworks which use Bayes’ theorem to assess hypothesis probabilities through analyzed datasets. These predictive models depend on both scientific expertise and the assumptions made by the data scientist. They function as tools to determine predictive distributions for unknown variables.

There are three main types of Bayesian models: Bayesian parameter estimation models, Bayesian network models, and Bayesian non-parametric models.

- Bayesian parameter estimation models help determine probabilistic models’ uncertain or unknown parameters through systematic parametric estimation. They identify parameters through posterior distribution evaluation based on collected data.

- Bayesian network models are probabilistic graphical models show the interconnections between variables. These models determine the value of one variable through known values of other variables within the system.

- Bayesian non-parametric models function as probabilistic models which avoid making any hypothesis regarding a distribution’s core characteristics. These models provide statistical estimates about hypotheses without requiring knowledge of distribution parameters.

The application of Bayesian models proves highly successful for understanding complex systems as well as predicting behaviors from collected observations. Multiple fields including machine learning development services, artificial intelligence, and medical research use these models extensively.

10. Neural Network Models

Neural network models are machine learning systems that autonomously learn and implement decisions. Their operation does not depend on fixed parameters nor previous knowledge. The successful execution of these systems depends on meeting particular requirements combined with validation checks.

To operate effectively, these models require extensive training with large datasets that encompass a broad and accurate representation of the target data. If the training data differs from real-world data, the model may produce inaccurate results.

In addition, the model requires testing with various input conditions and configuration settings to ensure operational reliability. Finally, the evaluation process should involve model validation through performance metrics such as accuracy, precision, and F1 scores to measure model success and detect weak points.

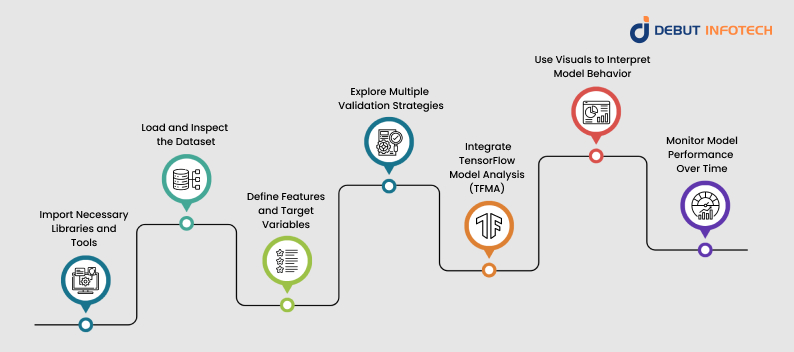

How to Validate Machine Learning Models: A Step-by-Step Guide

Step 1: Import Necessary Libraries and Tools

Before starting model validation, it’s important to import relevant Python libraries and tools such as:

- Pandas for data manipulation

- Numpy for numerical computations

- Seaborn and Matplotlib for visualization

- Scikit-learn modules like train_test_split, cross_val_score, accuracy_score, and various model evaluation techniques

- Classification models such as DecisionTreeClassifier, RandomForestClassifier, or SVC

- Validation tools such as StratifiedShuffleSplit, GroupKFold, or RepeatedKFold

It’s also helpful to have a basic understanding of ML workflows using Apache Spark, Jupyter Notebook for experimentation, and GitHub for version control and collaboration, especially when scaling projects on modern Machine Learning Platforms.

Step 2: Load and Inspect the Dataset

Start by loading the dataset using Pandas or reading from a cloud source like Google Drive or AWS S3. Once loaded, perform exploratory data analysis (EDA), which includes:

- Checking data types and structure with df. info

- Identifying missing or duplicate values

- Understanding feature distributions using histograms or box plots

- Detecting outliers and possible data imbalances

This step ensures the dataset is clean and structured properly for the next steps.

Step 3: Define Features and Target Variables

Once your dataset is verified, prepare the features and target variables for the model:

- Define X as the feature matrix by selecting relevant columns. For example: X = df.drop(“churn”, axis=1).values

- Define y as the target array: y = df[“churn”].values

This formatting allows the model to be trained and evaluated correctly.

Step 4: Explore Multiple Validation Strategies

Beyond the basic train/test split, there are advanced machine learning validation methods to improve model reliability:

- Group K-Fold: Especially useful when you have grouped data like multiple transactions per customer.

- Leave-One-Group-Out (LOGO): Ideal for time-series or grouped observations.

- Repeated Stratified K-Fold: Applies stratified k-fold multiple times to account for variance in splits.

- Bootstrapping: Resamples the data with replacement to estimate performance.

Evaluation techniques can also include:

- Confusion Matrix: Evaluates classification performance with TP, TN, FP, FN.

- ROC Curve and AUC Score: Measures model performance at various classification thresholds.

- Gain/Lift Charts: Visualizes how much better the model performs compared to random guessing.

Step 5: Integrate TensorFlow Model Analysis (TFMA)

If you’re using TensorFlow/Keras for deep learning in predictive analytics, TFMA provides in-depth validation tools:

- Import tensorflow_model_analysis

- Define TFMA.EvalConfig with your metrics and slicing specifications

- Use tfma.EvalSharedModel to link your trained Keras model

- Run analysis with tfma.run_model_analysis()

- Visualize results using tfma.view.render_slicing_metrics()

This helps analyze model performance across different feature slices.

Step 6: Use Visuals to Interpret Model Behavior

Visualization can significantly enhance your understanding of model performance:

- Heatmaps for correlation analysis

- SHAP values to interpret feature importance

- Time-series plots to show model predictions over time

- Residual plots to diagnose prediction errors

These tools reveal patterns, biases, and potential improvement areas.

Step 7: Monitor Model Performance Over Time

Once deployed, it’s critical to track the model’s accuracy and behavior across time:

- Log metrics such as accuracy, precision, and recall

- Use dashboards (e.g., with MLflow or TensorBoard) to visualize changes

- Set performance benchmarks and alerts for model drift

- Periodically retrain the model using updated datasets

Continuous monitoring ensures the model adapts to real-world changes and remains effective.

Data Validation vs. Model Validation in Machine Learning

Data validation is a preprocessing step that ensures input data is accurate, consistent, and representative before training a machine learning model. This process deals with handling missing values, detecting outliers, data type verification and checking that feature distributions match expectations. This step prevents future training complications and enhances model dependability.

Model validation, on the other hand, serves as the assessment method which evaluates trained models’ performance. It includes techniques like train/test splits, cross-validation and measurement tools including accuracy, precision, recall and RMSE.The goal of this procedure is to evaluate the model’s capability to perform well with unknown data.

In essence, data validation focuses on maintaining input quality while model validation checks output quality. They both act as essential components for developing strong ML systems, a priority for machine learning development companies aiming to deploy scalable and trustworthy AI solutions.

Benefits of Implementing Proper ML Model Validation

Ensures High-Quality Output: The implementation of validation processes throughout the machine learning pipeline guarantees consistent output of high-quality results.

Improves System Management: ML system maintenance and management becomes possible through validation processes throughout all stages of system development.

Builds Stakeholder Confidence: System validation results that are reliable enable external stakeholders to ensure proper and accurate functioning of the systems.

Enhances Transparency: The Validation process brings transparency that gives trust to both internal personnel and external parties.

Maximizes Business Value: ML systems gain increased business confidence when their pipeline has received validation to confirm the delivery of dependable and valuable outputs.

Maintains Production-Ready Models: The validation process identifies models that satisfy established performance benchmarks before placing them into production.

Key Challenges and Best Practices in ML Model Validation

Machine learning models need proper validation to achieve reliability and high performance. However, there are multiple common issues that both data scientists and ML engineers need to carefully handle. Understanding these issues alongside proven best practices allows teams to strengthen their validation procedures while improving their model quality.

Here are some of the key challenges and practical ways of solving them:

1. Data Leakage: The inclusion of test set data during training leads to inflated performance metrics because of incorrect information.

- Best Practice: Ensure that the test set must remain entirely independent from training procedures with cross-validation methods applied to avoid data contamination. Proper structuring of the validation model is critical to maintaining integrity in results.

2. Overfitting to the Validation Set: Model performance becomes compromised when validation-based adjustments are made repeatedly because this leads to overfitting which limits the model’s generalization capabilities.

- Best Practice: To prevent overfitting while ensuring good generalization, the model must be validated using a distinct set or through cross-validation methods.

3. Neglecting Data Quality Issues: The validation process fails when developers neglect to address missing data, extreme values or inconsistent data points in the validation set.

- Best Practice: Data cleaning procedures need to address missing values, outliers and inconsistent elements before training to create a valid validation set.

4. Ignoring Real-World Scenarios: This refers to validating models under ideal conditions which ignore the complex deployment requirements of real-world environments.

- Best Practice: Introduce real-world conditions through validation simulations by implementing noise patterns, system variations, and marginal situations that will be encountered in actual deployment scenarios. This aligns with machine learning trends prioritizing robustness in dynamic, unpredictable environments.

5. Bias and Fairness Gaps: This refers to the failure of models to detect and fix bias patterns that appear when making predictions for different demographic groups and protected characteristics.

- Best Practice: Conduct regular bias assessments through model performance analysis across diverse demographic categories to guarantee ethical and fair practices.

Model validation shouldn’t feel like a gamble. Let’s collaborate.

Schedule a consultation with our ML specialists to refine your approach, tackle hidden flaws, and build frameworks that stand up to scrutiny. Together, we’ll make sure your AI works as hard as you do.

End Note

Validating machine learning models is essential for ensuring their reliability. By avoiding pitfalls like data leakage and overfitting, and applying best practices such as cross-validation and addressing biases, teams can build robust models that perform well in real-world scenarios.

Frequently Asked Questions (FAQs)

Validation of an ML model involves evaluating its performance to ensure it can generalize well to new, unseen data. This process helps detect and address overfitting—a situation where the model performs well on training data but struggles with unfamiliar data due to being too closely tailored to the training set.

Data model validation is the process of checking and confirming that the collected data is accurate, consistent, and suitable for use before it is processed or analyzed.

Beyond just the numerical outcomes, model validation also considers how results are formatted and presented. It ensures that the results are communicated clearly and accurately, avoiding any potential for misleading the user. Additionally, if a comparable model exists, validating involves comparing outputs to ensure consistency and reliability.

Talk With Our Expert

Our Latest Insights

USA

2102 Linden LN, Palatine, IL 60067

+1-708-515-4004

info@debutinfotech.com

UK

Debut Infotech Pvt Ltd

7 Pound Close, Yarnton, Oxfordshire, OX51QG

+44-770-304-0079

info@debutinfotech.com

Canada

Debut Infotech Pvt Ltd

326 Parkvale Drive, Kitchener, ON N2R1Y7

+1-708-515-4004

info@debutinfotech.com

INDIA

Debut Infotech Pvt Ltd

Sector 101-A, Plot No: I-42, IT City Rd, JLPL Industrial Area, Mohali, PB 140306

9888402396

info@debutinfotech.com

Leave a Comment