LLM Comparison: A Comparative Analysis In-Depth

February 7, 2025

Demand for sophisticated natural language processing is booming, and the arrival of large language models (LLMs) marks a major turning point in the field. AI technology is progressing at a breakneck pace, and LLMs are transforming how we work with text, giving us more capable ways to communicate, analyze, and create content.

In this LLM comparison, we’ll analyze top models like GPT-4, Claude 2, Gemini, Llama, Mistral, and DeepSeek, comparing their capabilities, performance, and ideal applications. Whether you’re a business looking for AI-driven automation, a developer exploring open-source options, or a researcher needing advanced reasoning capabilities, this guide will help you make an informed decision.

Let’s dive into a side-by-side comparison of today’s leading LLMs.

What Are Large Language Models and Why Do They Matter?

Large Language Models (LLMs) are advanced artificial intelligence systems trained on vast amounts of text data to understand, generate, and process human language. Built on deep learning architectures, primarily transformers, these models can perform a wide range of natural language processing (NLP) tasks, including text generation, translation, summarization, and even code writing.

When conducting an LLM comparison, it’s clear that their versatility and scale make them indispensable tools for modern businesses and developers.

How Do Large Language Models Work?

LLMs are powered by neural networks, specifically the Transformer architecture that underlies models such as OpenAI’s GPT-4, Google’s Gemini, and Meta’s Llama. These models are trained on massive datasets containing books, articles, websites, and other textual sources. Through a process called self-supervised learning, in which the model repeatedly predicts the next token of a passage and is corrected against the text itself, they learn patterns, context, and semantics, enabling them to generate human-like responses and complete many tasks with high accuracy.
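To make the self-supervised idea concrete, here is a toy sketch in Python. It is a simple word-pair counter, not a Transformer, and the corpus and function names are made up for illustration. The point it shows is that the training "labels" come from the text itself: the label for each word is simply the word that follows it.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(text: str) -> dict:
    """Count which word follows which.

    The training targets are just the next words in the raw text,
    so no human annotation is needed (that is the self-supervision).
    """
    tokens = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical miniature corpus, for illustration only.
corpus = "the model reads text . the model predicts text . the model learns"
model = train_bigram(corpus)
print(predict_next(model, "the"))
print(predict_next(model, "unseen"))
```

A real LLM replaces the counting table with billions of learned weights and conditions on the whole preceding context rather than one word, but the training signal is the same kind of next-token prediction.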

For businesses working with artificial intelligence technology, LLMs can automate complex, language-heavy workflows.

Some key characteristics of LLMs include:

- Massive Scale – They contain billions (or even trillions) of parameters, making them incredibly powerful.

- Context Awareness – They analyze and predict words based on context, improving coherence and relevance.

- Multimodal Capabilities – Some modern LLMs can process text, images, and even audio.

- Fine-tuning & Customization – Many LLMs can be fine-tuned on domain-specific data for specialized tasks.
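To get a feel for what "billions of parameters" means in practice, a quick back-of-the-envelope calculation helps. The sketch below estimates the memory needed just to hold a model's weights at different numeric precisions. The 7-billion-parameter figure is a hypothetical example, and the estimate deliberately ignores activations, caches, and training state, so treat it as a lower bound.

```python
# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate memory for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Hypothetical 7-billion-parameter model, for illustration only.
for precision in BYTES_PER_PARAM:
    size = weight_memory_gb(7e9, precision)
    print(f"7B parameters @ {precision}: {size:.1f} GB")
```

The same arithmetic explains why lower-precision (quantized) versions of a model are popular for local deployment: halving the bytes per parameter halves the memory needed for the weights.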

Why Do LLMs Matter?

LLMs have revolutionized industries by enhancing automation, improving efficiency, and enabling new AI-powered applications. Here’s why they are important:

- Transforming Content Creation – LLMs power AI-driven writing tools, chatbots, and digital assistants, making content generation faster and more scalable.

- Enhancing Customer Support – AI chatbots and virtual assistants provide 24/7 support, reducing human workload.

- Driving Innovation in Research & Development – LLMs assist in scientific research, medical diagnosis, and data analysis by processing vast amounts of information.

- Empowering Developers – Coders use LLM-powered tools like GitHub Copilot and ChatGPT to generate and optimize code.

- Boosting Business Productivity – Companies use LLMs for automating emails, generating reports, and streamlining workflows.

As AI technology continues to evolve, LLMs will play an even greater role in shaping the future of communication, automation, and decision-making across industries and organizations of every size, including startups.

Challenges and Considerations of LLM Development: Insights from an LLM Comparison

Developing Large Language Models (LLMs) is a complex and resource-intensive task that involves numerous challenges and considerations. Comparing LLMs reveals that each model has strengths and weaknesses, but all face common hurdles such as:

1. Data Availability and Quality

One of the most significant challenges in LLM development is the availability of high-quality, diverse datasets. LLMs like GPT-4 and Claude 2 are trained on massive amounts of data from the internet, but the quality of that data can vary. Inconsistent, biased, or unverified information can lead to models that generate inaccurate or biased outputs. For an effective LLM performance comparison, evaluating how each model handles data diversity, bias, and misinformation is crucial.

- Considerations: Ensuring data diversity for different domains (e.g., healthcare, finance). Implementing robust data curation techniques to filter out low-quality or biased data. Balancing the data set size for model performance while avoiding overfitting.

2. Computational Resources and Cost

Training LLMs, especially large-scale models like GPT-4 and Gemini, requires massive computational power. These models demand specialized hardware, like GPUs or TPUs, and consume significant energy. The high cost of training and maintaining these models is a major barrier, particularly for small to medium-sized LLM development companies.

- Considerations: Investment in cutting-edge hardware and cloud infrastructure for efficient training. Balancing computational efficiency with performance for cost-effective deployment. Exploring more energy-efficient alternatives like Mistral or lightweight models for specific use cases.

3. Model Interpretability and Transparency

As LLMs grow more sophisticated, understanding how they generate responses and make decisions becomes increasingly difficult. This lack of transparency, often referred to as the “black-box” problem, is a significant consideration when developing or deploying LLMs. For industries like cryptocurrency exchanges and smart contract development, where precision and accountability are paramount, ensuring model interpretability is essential.

- Considerations: Developing techniques to interpret and explain model outputs to ensure trustworthiness. Fostering collaboration between AI researchers, developers, and regulatory bodies to establish transparency standards. Using explainable AI (XAI) methods to enhance model transparency.

4. Ethical and Safety Concerns

Ethical challenges remain one of the biggest hurdles in LLM development. Models can unintentionally perpetuate harmful biases or generate unsafe content, leading to public backlash or legal issues. As a comparison of LLM safety approaches shows, models like Claude 2 prioritize safety, making them a strong fit for regulated industries such as healthcare.

- Considerations: Implementing AI safety protocols, including content moderation systems and bias detection. Developing ethical guidelines for deploying LLMs in sensitive areas such as finance, healthcare, and blockchain technology. Continuous monitoring and feedback loops to mitigate risks related to harmful outputs.

In summary, while LLM development brings immense potential, it is fraught with challenges that require careful consideration. By examining LLM comparisons, developers and organizations can better navigate these obstacles, ultimately advancing the field of AI while ensuring safe, efficient, and responsible usage.

An Overview of the Most Notable Large Language Models

The landscape of Large Language Models (LLMs) has evolved rapidly, with each model carving out its niche in creativity, efficiency, or specialization. Below, we break down the best large language models powering today’s AI revolution, from industry giants to open-source innovators.

1. OpenAI’s Dynamic Duo: GPT-4 and GPT-4o

- GPT-4: OpenAI’s flagship multimodal model remains the gold standard for versatility. It processes text and images, excels in complex reasoning, and powers tools like ChatGPT Plus. With a 128k-token context window (GPT-4 Turbo), it handles everything from coding to creative writing but comes with high computational costs.

- GPT-4o: The “o” stands for “omni”: this sibling of GPT-4 handles text, images, and audio in a single model while prioritizing speed and cost-efficiency. Ideal for real-time applications like voice assistants or live customer support, GPT-4o broadens access to high-tier AI without a noticeable drop in quality.

- Best for: Enterprises needing top-tier performance (GPT-4) and startups prioritizing affordability (GPT-4o).

2. DeepSeek: China’s Rising Star

Developed by DeepSeek AI, this model shines in multilingual proficiency, particularly for Chinese-language tasks. Lightweight and business-focused, it’s a cost-effective solution for e-commerce and customer service in Asia. However, limited global adoption and sparse English documentation hinder its worldwide reach.

- Best for: Businesses targeting Asian markets or needing efficient multilingual support.

3. Anthropic’s Ethical Powerhouses: Claude 2 and Claude 3.5 Sonnet

- Claude 2: Anthropic’s ethical AI champion prioritizes safety and bias mitigation. With superior context retention (100k tokens), it’s a favorite for legal document analysis and long-form content moderation. Its downside? Less “creative flair” compared to GPT-4.

- Claude 3.5 Sonnet: The latest iteration boosts accuracy and nuance handling, making it a go-to for healthcare and finance applications where ethical compliance is critical. While still lagging behind GPT-4 in raw creativity, it closes the gap with improved problem-solving.

- Best for: Regulated industries (healthcare, law, finance) and teams prioritizing AI safety.

4. Google’s Gemini Family: Bridging Search and AI

- Gemini: Google’s answer to GPT-4 integrates seamlessly with its ecosystem (Search, Workspace, Cloud). Its real-time data access and multimodal skills (text + images) make it ideal for dynamic applications like live market analysis.

- Gemini 1.5 Pro: This upgrade boasts a 1M-token context window—enough to process entire novels—and refines Google’s strength in data-driven tasks. Perfect for enterprises needing to analyze massive datasets or generate real-time reports.

- Best for: Companies embedded in Google’s ecosystem or requiring real-time data integration.
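Context windows are easier to compare with a quick calculation. The sketch below checks which of the context windows quoted in this overview (128k for GPT-4 Turbo, 100k for Claude 2, 1M for Gemini 1.5 Pro) could hold a document of a given length. The 4-characters-per-token ratio is a rough rule of thumb for English text and an assumption here, as is the output reserve; a real check would use each provider's own tokenizer.

```python
from typing import List

# Context windows as quoted in this article.
CONTEXT_WINDOW_TOKENS = {
    "GPT-4 Turbo": 128_000,
    "Claude 2": 100_000,
    "Gemini 1.5 Pro": 1_000_000,
}

# Rough heuristic for English text; an assumption, not a tokenizer.
CHARS_PER_TOKEN = 4


def estimate_tokens(text_length_chars: int) -> int:
    """Estimate the token count from the character count (rounded up)."""
    return -(-text_length_chars // CHARS_PER_TOKEN)


def models_that_fit(text_length_chars: int, reserve_for_output: int = 1_000) -> List[str]:
    """List the models whose window holds the input plus an output reserve."""
    needed = estimate_tokens(text_length_chars) + reserve_for_output
    return [name for name, window in CONTEXT_WINDOW_TOKENS.items() if needed <= window]


# A hypothetical 480,000-character document (roughly a long novel).
print(models_that_fit(480_000))
```

For documents that exceed every window, the usual workaround is to split the text into chunks and process them separately.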

5. Meta’s Open-Source Challengers: Llama 2 and Llama 3.1

- Llama 2: Meta’s open-source pioneer democratized LLM access, offering researchers and startups a free, customizable base model. While less powerful than GPT-4, its efficiency and transparency fuel academic projects and lightweight apps.

- Llama 3.1: The latest upgrade enhances multilingual support and scalability, bridging the gap between open-source flexibility and enterprise-grade performance. Still requires fine-tuning but now competes with mid-tier proprietary models.

- Best for: Developers and researchers needing transparency and customization.

6. Mistral AI’s Mixtral Series: The Efficiency Experts

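- Mixtral 8x7B: This open-source marvel uses a Mixture of Experts (MoE) architecture, in which a router sends each token to only a few of the model’s expert sub-networks, to deliver GPT-3.5-level performance at a lower inference cost than a dense model of similar size. Its multilingual prowess and scalability make it a dark horse for global enterprises.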

- Mixtral 8x22B: The larger sibling doubles down on performance, rivaling GPT-4 in specialized tasks while staying open-source. It’s a heavyweight contender for businesses needing cutting-edge AI without vendor lock-in.

- Best for: Cost-conscious enterprises and developers who value open-source flexibility when integrating AI into their existing stack.
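The Mixture of Experts idea behind the Mixtral series can be sketched in a few lines. In the toy Python example below, a router scores eight experts, only the top two are run, and their outputs are blended using softmax weights. Everything here is simplified for illustration: the experts are plain scaling functions and the router scores are hard-coded, whereas a real model uses learned neural layers operating on vectors.

```python
import math
from typing import Callable, Dict, List


def top_k_gate(router_logits: List[float], k: int = 2) -> Dict[int, float]:
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    order = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = order[:k]
    peak = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exp_scores = {i: math.exp(router_logits[i] - peak) for i in top}
    total = sum(exp_scores.values())
    return {i: score / total for i, score in exp_scores.items()}


def moe_layer(x: float, experts: List[Callable[[float], float]],
              router_logits: List[float], k: int = 2) -> float:
    """Run only the selected experts and blend their outputs by gate weight."""
    weights = top_k_gate(router_logits, k)
    return sum(weight * experts[i](x) for i, weight in weights.items())


# Eight toy experts: expert i multiplies its input by (i + 1).
experts = [lambda x, s=s: s * x for s in range(1, 9)]
# Hypothetical router scores for one token.
router_logits = [0.1, 2.0, -1.0, 0.3, 1.0, 0.0, -0.5, 0.2]

print(top_k_gate(router_logits))
print(moe_layer(1.0, experts, router_logits))
```

Because six of the eight experts are skipped for this token, the compute per token is much lower than the total parameter count suggests, which is where the efficiency comes from.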

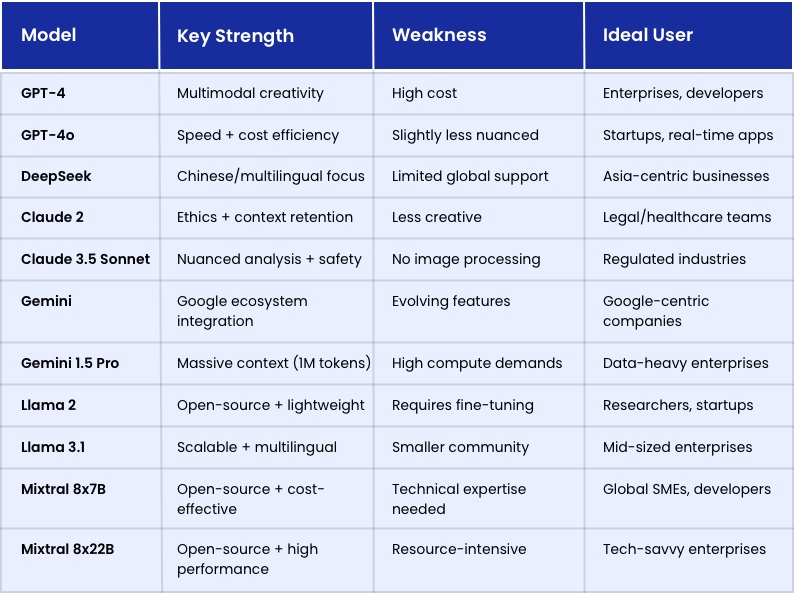

A Side-by-Side Comparison of Diverse LLMs

Here’s a breakdown comparing some well-known large language models, focusing on what makes them tick and how they might be used in different situations:

How to Select the Right Large Language Model for Your Specific Use Case

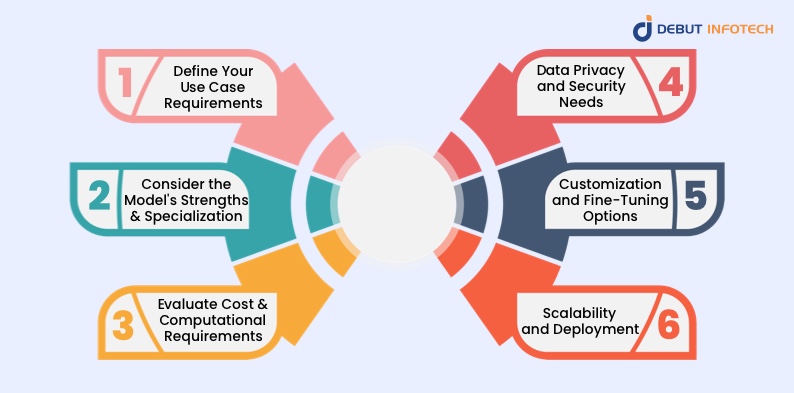

Selecting the right Large Language Model (LLM) for your specific needs is a crucial decision that can impact the performance, cost, and scalability of your AI-powered applications. With so many models available, each with unique strengths and weaknesses, it can be overwhelming to make the best choice. Here’s a step-by-step guide on how to select the most suitable LLM for your use case.

1. Define Your Use Case Requirements

Before diving into the specifics of each LLM, it’s essential to clearly define your use case. What tasks will your model be handling? Whether it’s content generation, customer service, or code writing, understanding the nature of the tasks will help you identify which LLM excels in that domain.

Considerations:

- Is your task conversational, requiring natural language understanding?

- Does it involve technical or specialized knowledge, like AI technology or generative AI?

- Do you need the model to process multimodal inputs, such as text and images? (For example, Gemini excels at multimodal capabilities.)

By defining your needs upfront, you’ll have a better idea of what each model can offer in terms of accuracy, speed, and relevance.

2. Consider the Model’s Strengths and Specialization

Each LLM has its own strengths based on how it was trained and its specific architecture. Some models perform exceptionally well in general-purpose tasks, while others may be more specialized. For instance, the best performing LLM for content creation (GPT-4) differs from the ideal choice for ethical compliance (Claude 3.5 Sonnet).

Considerations:

- If your use case requires high-quality content creation, GPT-4 may be your best option.

- For tasks requiring data retrieval or knowledge extraction, DeepSeek could be a better fit.

- If ethical AI is a top priority, Claude 2’s focus on safety and bias mitigation makes it a strong candidate.

Make sure to match the model’s strengths with the tasks you need it to perform.
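One lightweight way to match strengths to requirements is a weighted scoring matrix. The sketch below is only an illustration: the candidate names, criteria, and 1-to-5 scores are placeholders you would replace with results from your own evaluation, not measurements of any real model.

```python
from typing import Dict, List, Tuple


def rank_models(scores: Dict[str, Dict[str, float]],
                weights: Dict[str, float]) -> List[Tuple[str, float]]:
    """Rank candidates by the weighted average of their criterion scores."""
    total_weight = sum(weights.values())
    ranked = []
    for name, criteria in scores.items():
        weighted = sum(weights[c] * criteria[c] for c in weights) / total_weight
        ranked.append((name, round(weighted, 2)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


# How much each requirement matters for this (hypothetical) use case.
weights = {"content_quality": 3, "safety": 2, "cost_efficiency": 1}

# Placeholder scores on a 1-5 scale; fill in from your own testing.
scores = {
    "model_a": {"content_quality": 5, "safety": 3, "cost_efficiency": 2},
    "model_b": {"content_quality": 3, "safety": 5, "cost_efficiency": 3},
    "model_c": {"content_quality": 3, "safety": 3, "cost_efficiency": 5},
}

for name, score in rank_models(scores, weights):
    print(name, score)
```

Changing the weights changes the winner, which is the point: the "best" model depends on which requirements you defined in step 1.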

3. Evaluate Cost and Computational Requirements

The computational resources required to run LLMs can vary greatly depending on the model’s size and complexity. Models like GPT-4 require significant resources for both training and inference, which leads to higher operational costs. If cost efficiency is a concern, consider lightweight models like Mistral, which are small enough to run locally, while Gemini 1.5 Pro scales for enterprise needs.

Considerations:

- What is your budget for cloud computing or in-house hardware?

- Does your use case involve real-time or high-frequency requests? Some models, like Mistral, are optimized for lower-latency tasks.

- Can your organization support the computational overhead required by large-scale models?
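For API-based models, the budget question usually comes down to token volume. The sketch below estimates a monthly bill from request volume and per-token prices. The traffic figures and prices in the example are hypothetical placeholders; substitute your provider's current price list.

```python
def monthly_cost_usd(requests_per_day: int,
                     input_tokens: int,
                     output_tokens: int,
                     price_in_per_million: float,
                     price_out_per_million: float,
                     days: int = 30) -> float:
    """Estimate monthly API spend from average tokens per request."""
    per_request = (input_tokens * price_in_per_million
                   + output_tokens * price_out_per_million) / 1_000_000
    return requests_per_day * days * per_request


# Hypothetical workload and prices, for illustration only.
estimate = monthly_cost_usd(
    requests_per_day=10_000,
    input_tokens=1_000,
    output_tokens=500,
    price_in_per_million=10.0,
    price_out_per_million=30.0,
)
print(f"Estimated monthly cost: ${estimate:,.2f}")
```

Running the same numbers against two or three candidate models makes the cost trade-off between a large hosted model and a smaller one much more tangible.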

4. Data Privacy and Security Needs

Data privacy is becoming increasingly important, especially when dealing with sensitive information. For instance, industries like healthcare, finance, and blockchain startups require strict adherence to data protection standards. Some LLMs, such as Claude 2, have built-in safety measures to prevent harmful content generation, while others may offer customizable privacy settings to meet specific security needs.

Considerations:

- What are your data handling and privacy requirements?

- Does the model comply with industry standards like GDPR or HIPAA for data protection?

- Do you need the ability to fine-tune the model using private data securely?

If data security is a high priority, make sure to choose a model that offers the necessary privacy features.

5. Customization and Fine-Tuning Options

For many businesses, the ability to fine-tune an LLM to suit specific needs is a key consideration. Open-source models like Llama 3.1 can be downloaded and fine-tuned on your own data, which makes them a natural base for custom generative AI applications. If your use case involves specialized language or unique industry terms, having the flexibility to fine-tune the model can significantly improve performance.

Considerations:

- Does the LLM offer tools for fine-tuning or customizing to your specific domain?

- How easy is it to integrate domain-specific datasets into the training process?

- What level of technical expertise is required to fine-tune the model effectively?

Fine-tuning is especially useful where domain-specific jargon is critical, such as in blockchain ecosystems or smart contract development.
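Most fine-tuning workflows start with the same chore: turning domain examples into a machine-readable training file and holding some back for validation. The sketch below shows one common shape for that step. The `prompt` and `completion` field names and the example pairs are assumptions for illustration; each fine-tuning toolkit defines its own expected schema, so check the documentation of the one you use.

```python
import json
import random
from typing import List, Tuple


def to_jsonl_records(pairs: List[Tuple[str, str]]) -> List[str]:
    """Serialize (question, answer) pairs as one JSON object per line.

    The "prompt"/"completion" keys are a hypothetical schema.
    """
    return [json.dumps({"prompt": q, "completion": a}, ensure_ascii=False)
            for q, a in pairs]


def train_val_split(records: List[str], val_fraction: float = 0.2,
                    seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle reproducibly and hold out a validation slice."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]


# Made-up domain examples, for illustration only.
pairs = [
    ("What is a smart contract?", "A program stored and executed on a blockchain."),
    ("What is gas?", "The fee paid to execute operations on some blockchains."),
    ("What is a wallet address?", "An identifier used to send and receive assets."),
    ("What is a token?", "A digital asset issued on top of an existing blockchain."),
    ("What is a node?", "A computer that keeps a copy of the blockchain ledger."),
]

records = to_jsonl_records(pairs)
train, val = train_val_split(records)
print(len(train), "training records,", len(val), "validation record(s)")
print(train[0])
```

Keeping a validation slice out of training is what lets you tell whether the fine-tuned model has actually learned the domain or just memorized the examples.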

6. Scalability and Deployment

As your application grows, you’ll need an LLM that can scale with your business. Some models are optimized for cloud-based deployment and can handle high volumes of requests, while others are more suited for on-premise or edge computing. Models like GPT-4 are typically accessed through a cloud API, while the smaller Mistral and Llama variants are lightweight enough for on-premise or edge deployment.

Considerations:

- What are your scalability needs?

- Will the model need to handle a large number of simultaneous users or requests?

- Can the model be deployed across multiple environments, such as cloud servers, edge devices, or local machines?

- How easy is it to integrate the model into existing systems and workflows?

Choose an LLM that can grow with your business, whether that means handling more users, expanding to new geographic regions, or integrating with new technologies like blockchain.

How Debut Infotech Can Help You Choose the Right LLM

As a leading LLM development company, Debut Infotech specializes in:

- Expert Consultation

We work closely with you to define your use case and understand your specific requirements, whether for blockchain technology, content generation, or customer support.

- Customized LLM Selection

After evaluating your needs, we perform a comprehensive LLM comparison to recommend the most suitable model, ensuring it meets your performance and scalability goals.

- Fine-Tuning and Integration

We offer LLM fine-tuning to tailor models to your domain, including blockchain ecosystems or any specialized field, and integrate them seamlessly into your infrastructure.

- Scalable Solutions

Whether you’re a startup or an established business, we deliver scalable and cost-effective LLM solutions that grow with your needs.

Unlock the full potential of AI with Debut Infotech. Let us help you select, implement, and optimize the best large language models for your business needs.

Frequently Asked Questions (FAQs)

What is a large language model (LLM)?

An LLM is a type of artificial intelligence model trained on extensive text data to understand, generate, and process human language. These AI models utilize deep learning architectures, such as transformers, to perform tasks like text generation, translation, summarization, and more.

How do LLMs work?

LLMs operate using neural networks, particularly transformer architectures, which process and analyze vast amounts of text data. Through training, they learn language patterns, context, and semantics, enabling them to generate human-like responses and perform various natural language processing tasks.

Which are the leading LLMs?

Some of the leading LLMs include:

1. GPT-4: Developed by OpenAI, known for its versatility and advanced reasoning capabilities.

2. Claude 3.5 Sonnet: Created by Anthropic, emphasizes ethical AI and safety.

3. Gemini 1.5 Pro: Google’s model, integrates seamlessly with its ecosystem and offers real-time data access.

4. Llama 3.1 405B: Meta’s open-source model, notable for its scalability and multilingual support.

5. DeepSeek R1: A model from the Chinese company DeepSeek, recognized for its reasoning capabilities and efficiency.

Each model has unique strengths and is suited to different applications.

How do I choose the right LLM for my needs?

Selecting the appropriate LLM depends on several factors:

1. Use Case Requirements: Determine the specific tasks you need the model to perform, such as content creation, data analysis, or customer interaction.

2. Model Strengths: Align the model’s capabilities with your needs; for instance, GPT-4 excels in creative tasks, while Claude 3.5 Sonnet focuses on ethical considerations.

3. Resource Availability: Consider the computational resources required and whether you have the infrastructure to support the model.

4. Cost: Evaluate the costs associated with deploying and maintaining the model, including licensing fees and operational expenses.

5. Data Privacy: Ensure the model complies with data protection regulations relevant to your industry.

Assessing these factors will guide you toward the most suitable LLM for your objectives.

What ethical considerations apply when using LLMs?

Ethical considerations include:

1. Bias Mitigation: Ensuring the model does not perpetuate or amplify biases present in the training data.

2. Content Moderation: Implementing safeguards to prevent the generation of harmful or inappropriate content.

3. Transparency: Being clear about the model’s capabilities and limitations to users.

4. Data Privacy: Protecting user data and complying with relevant privacy laws and regulations.

Models like Claude 3.5 Sonnet prioritize ethical AI practices, making them suitable for applications where these considerations are paramount.

How much does it cost to deploy an LLM?

The cost of deploying an LLM varies based on factors such as model complexity, computational requirements, licensing fees, and usage volume. For instance, the original GPT-4 API (8k context) launched at $30 per 1 million input tokens and $60 per 1 million output tokens, while newer and smaller models are considerably cheaper. Prices change often, so check the provider’s current price list and assess your specific needs and budget constraints when considering deployment.

Can LLMs be fine-tuned for specific applications or industries?

Yes, many LLMs can be fine-tuned to cater to specific applications or industries. This process involves training the model on specialized datasets to enhance its performance in particular tasks, such as legal document analysis, medical information processing, or technical support. Fine-tuning allows the model to generate more accurate and relevant outputs tailored to specific contexts.

Do LLMs support multiple languages?

Many LLMs are trained on multilingual datasets, enabling them to understand and generate text in various languages. Models like DeepSeek R1 and Llama 3.1 405B have demonstrated strong multilingual capabilities, making them suitable for applications requiring language diversity.
