The Impact of Deep Learning on Predictive Analytics

March 27, 2025

What if businesses could predict market trends, customer behavior, or supply chain disruptions with near-perfect accuracy?

As businesses increasingly rely on AI algorithms and data-driven strategies to stay ahead, predictive analytics has become a game-changer. Traditional forecasting models, however, struggle with complex, high-dimensional data and often fail to produce accurate insights.

Deep learning, with its ability to recognize intricate patterns and relationships, is redefining predictive analytics. According to a McKinsey report, companies that implement AI-driven predictive models see forecasting error reductions of up to 50%, leading to significant cost savings and operational efficiency. By leveraging convolutional and recurrent neural networks, businesses can improve forecasting accuracy, reduce overfitting, and achieve better performance on unseen data.

This article explores how deep learning influences predictive analytics: its core mechanisms, best practices for implementation, and real-world applications that demonstrate measurable improvements in predictive precision.

Building and Deploying Deep Learning Models for Predictive Analytics

Developing efficient deep learning models for predictive analytics requires a structured approach that emphasizes data quality, appropriate model architecture, and optimization techniques. The following aspects deserve attention during model development:

1. Ensuring High-Quality Data

Predictive analytics depends on high-quality data to produce accurate results. One survey found that 66% of organizations rated their data quality as average, low, or very low, which undermines trust in data-driven decisions. According to Gartner surveys, 59% of organizations do not measure data quality at all, which makes it difficult to quantify both the cost of poor quality and the productivity of data quality programs. Partnering with machine learning consulting firms can help ensure clean, reliable datasets for trustworthy insights in your business.

2. Selecting the Appropriate Model Architecture

Selecting an appropriate model architecture is essential. Recurrent Neural Networks (RNNs) are effective for sequential data, while Convolutional Neural Networks (CNNs) excel with spatial data. Newer architectures, such as Transformer models, have proven successful on complex datasets.

3. Implementing Regularization Techniques

Preventing overfitting relies on regularization methods such as dropout and L2 regularization, which are fundamental elements of any AI tech stack. With these methods, models generalize better to data that was not present during training.
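To make the two methods concrete, here is a minimal, framework-free sketch. The function names, the sample weights, and the `data_loss` value are hypothetical and chosen only for illustration; real projects would normally rely on the dropout and weight-decay options built into their deep learning framework.

```python
import random


def l2_penalty(weights, lam):
    """L2 regularization term: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def inverted_dropout(activations, drop_prob, rng):
    """Zero each activation with probability drop_prob (training time only).

    Survivors are rescaled by 1 / (1 - drop_prob) so the expected value
    of each activation stays the same.
    """
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


rng = random.Random(0)            # fixed seed so the sketch is repeatable
weights = [0.5, -1.0, 2.0]        # hypothetical layer weights
data_loss = 0.30                  # hypothetical loss on the training batch

# The penalty is added to the data loss, discouraging large weights.
total_loss = data_loss + l2_penalty(weights, lam=0.01)

# Each hidden activation is either dropped or doubled when drop_prob is 0.5.
hidden = inverted_dropout([1.0, 2.0, 3.0, 4.0], drop_prob=0.5, rng=rng)
```

At inference time dropout is switched off, which is why the rescaling is applied during training in this variant.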

4. Utilizing Transfer Learning for Efficiency

Transfer learning enables models to adopt existing pre-trained networks, reducing the need for extensive training from scratch. This approach can significantly cut down training time and resource requirements.

5. Monitoring Key Performance Indicators (KPIs)

The evaluation of KPIs such as accuracy, precision, recall, and F1-score ensures the model meets performance expectations and identifies areas for improvement.
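As a rough illustration of how these KPIs relate to one another, the sketch below computes them from a list of true and predicted binary labels. The function name and the sample labels are made up for the example.

```python
def classification_kpis(y_true, y_pred, positive=1):
    """Return accuracy, precision, recall and F1 for a binary classifier."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equally long")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = len(pairs) - tp - fp - fn

    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 0, 1, 0]   # hypothetical ground truth
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]   # hypothetical model output
kpis = classification_kpis(y_true, y_pred)
# Here tp=3, fp=1, fn=1, tn=3, so all four metrics equal 0.75.
```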

6. Enhancing Feature Engineering

Effective feature engineering techniques such as normalization and one-hot encoding improve how data is represented, which in turn improves model performance. This also matters for keeping the model scalable.
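A small sketch of the two techniques named above, written in plain Python. The helper names and the sample `ages` and `regions` columns are illustrative assumptions, not part of any particular library.

```python
def min_max_scale(values):
    """Normalize numeric values to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(categories):
    """Map each category to a 0/1 vector with one position per distinct value."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    rows = [[1 if index[c] == i else 0 for i in range(len(vocab))] for c in categories]
    return vocab, rows


ages = [20, 30, 40]                        # hypothetical numeric column
regions = ["north", "south", "north"]      # hypothetical categorical column

scaled_ages = min_max_scale(ages)          # [0.0, 0.5, 1.0]
vocab, encoded_regions = one_hot_encode(regions)
# vocab is ["north", "south"]; rows are [1, 0], [0, 1], [1, 0]
```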

7. Continuous Model Optimization

After deployment, a model needs continuous optimization through A/B testing and frequent feedback loops to maintain its efficiency. The way businesses revise economic forecasts after inaccuracies illustrates the need for continuous model assessment.

How to Choose the Right Deep Learning Framework for Your Project

The success of a predictive analytics model hinges on selecting the right deep learning framework. With multiple options available, the choice depends on factors like performance, scalability, and ease of integration. Some popular frameworks include:

- TensorFlow: It is widely used for large-scale applications, offering seamless deployment and strong ecosystem support.

- PyTorch: It is preferred for research and experimentation due to its dynamic computation graph and flexibility.

- Keras: It provides a user-friendly API that simplifies model development, making it ideal for rapid prototyping.

- MXNet: Teams working with AWS can benefit from MXNet because it excels in distributed training and is optimized for cloud-based applications.

- JAX: It was developed by Google to deliver high-performance numerical computing with auto-differentiation, making it ideal for advanced research.

Key factors to consider include:

- Ease of Use: If rapid experimentation is a priority, PyTorch’s intuitive approach may be the best fit, while TensorFlow’s structured framework suits production environments.

- Scalability: Handling massive datasets? TensorFlow’s distributed computing capabilities suit enterprise applications where efficient resource utilization matters.

- Community and Support: A strong developer community speeds up troubleshooting and innovation. TensorFlow’s large developer community, reported at 1.5 million members, provides extensive documentation and abundant resources.

- Optimization Capabilities: Benchmark candidate frameworks against your project’s hardware specifications and requirements. For example, PyTorch’s flexibility benefits research, while TensorFlow optimizes execution speed in real-world deployments.

Staying current with deep learning framework developments and working with appropriate machine learning development companies helps keep predictive analytics models efficient and competitive.

Optimizing Feature Engineering for Deep Learning in Predictive Analytics

Effective feature engineering enhances deep learning models by refining raw data into meaningful inputs that improve predictive accuracy. Understanding the key techniques and applying them well can lead to major performance improvements. These techniques include:

- Ensuring Clean Data: Cleaning data involves removing duplicates, correcting inconsistencies, and handling missing values. Research indicates that data scientists dedicate nearly 80% of their time to data cleaning, which shows how much predictive accuracy depends on clean data.

- Reduce Dimensionality: Principal Component Analysis (PCA) eliminates redundant features, simplifying models while preserving the relevant input information that predictive accuracy depends on.

- Convert Categorical Data: Data scientists improve model interpretability and predictive effectiveness by transforming non-numeric variables into numerical form using label encoding or one-hot encoding.

- Extract Key Information: Applying CNNs to images or TF-IDF to text distills high-dimensional inputs into more manageable and meaningful representations.

- Scale Numerical Features: Applying normalization and standardization brings numerical values to a consistent range, improving model stability and accelerating convergence during training.

- Generate New Features: Creating interactions between existing features introduces non-linearity, which helps models capture deeper insights and improve accuracy.

Regularly assessing feature importance helps ensure that models rely only on valuable attributes. Automating feature engineering with tools like FeatureTools or AutoML, and staying updated on machine learning trends, further streamlines the process, minimizing manual effort and improving efficiency.
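Of the techniques listed above, TF-IDF is easy to show in a few lines. The sketch below uses one common variant (term frequency as count divided by document length, inverse document frequency as the natural log of total documents over documents containing the term); libraries often apply additional smoothing, so their numbers will differ. The function name and the tiny corpus are hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {term: weight} dict per tokenized document.

    tf  = term count / number of tokens in the document
    idf = ln(number of documents / number of documents containing the term)
    """
    n_docs = len(documents)
    doc_freq = Counter()
    for tokens in documents:
        doc_freq.update(set(tokens))   # count each term once per document

    weighted = []
    for tokens in documents:
        counts = Counter(tokens)
        weighted.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return weighted


docs = [                               # hypothetical support-ticket tokens
    ["late", "delivery", "refund"],
    ["late", "payment"],
    ["refund", "refund", "request"],
]
scores = tf_idf(docs)
# "delivery" appears in only one document, so within the first ticket it
# receives a higher weight than "late", which appears in two.
```

Terms that occur in every document get a weight of zero under this variant, which is the intended effect: they do not help distinguish one document from another.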

Enhancing Neural Network Architectures for Accurate Predictions

Designing the right neural network architecture is the key to unlocking deep learning’s full potential in predictive analytics. The difference between an underperforming model and a highly accurate one often lies in strategic optimizations such as fine-tuning layers, leveraging pre-trained models, and selecting the right training techniques.

Choosing the Right Architecture

Different tasks require different architectures, and selecting the best one can dramatically boost accuracy:

- Convolutional Neural Networks (CNNs): CNNs dominate in image recognition, achieving over 98% accuracy on CIFAR-10 by effectively capturing spatial patterns.

- Recurrent Neural Networks (RNNs): RNNs are essential for time-series and language modeling, and their LSTM variants enhance forecasting accuracy by 15% or more.

- Transformers: Revolutionizing NLP, these models outperform traditional methods by 30% in sentiment analysis and other complex tasks.

Optimizing for Performance

A well-structured neural network isn’t just about architecture; it’s about refining every component for peak efficiency:

- Hyperparameter Tuning: Adjusting hyperparameters such as learning rates, batch sizes, and activation functions plays a pivotal role in enhancing neural network performance. For instance, fine-tuning these parameters can significantly mitigate overfitting, leading to more accurate models.

- Dropout & Batch Normalization: Implementing dropout reduces overfitting by 50%, while batch normalization speeds up training convergence.

- Transfer Learning: Leveraging pre-trained models like BERT or ResNet can cut training time by 50% while maintaining high accuracy.

- Depthwise Separable Convolutions: These techniques shrink model size by up to 90% without sacrificing predictive power.
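The size reduction from depthwise separable convolutions follows from simple parameter counting. The sketch below compares one standard convolution layer with its depthwise separable counterpart, ignoring bias terms; the layer dimensions (3x3 kernel, 128 input channels, 256 output channels) are an arbitrary example, and the saving for a whole model depends on how many of its layers are replaced.

```python
def standard_conv_params(kernel, c_in, c_out):
    """Weights in a standard 2D convolution: one k x k filter per (in, out) pair."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel, c_in, c_out):
    """Weights in a depthwise (k x k per input channel) plus pointwise (1 x 1) pair."""
    depthwise = kernel * kernel * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


standard = standard_conv_params(3, 128, 256)          # 294912
separable = depthwise_separable_params(3, 128, 256)   # 1152 + 32768 = 33920
reduction = 1 - separable / standard                  # about 0.885

print(f"standard={standard}, separable={separable}, reduction={reduction:.1%}")
```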

Monitoring and Fine-Tuning

Constant evaluation and refinement separate good models from great ones:

- Visualization Tools: Integrating TensorBoard into your TensorFlow workflow significantly enhances model debugging and optimization. By visualizing training metrics, computational graphs, and performance profiles, TensorBoard provides comprehensive insights into model behavior, facilitating more efficient and effective development processes.

- Ensemble Learning: Combining multiple models can boost accuracy by 5-10%, leading to more reliable and consistent predictions.

Refining network architectures with these techniques ensures robust, high-performance predictive models across various deep learning applications.
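One simple form of ensemble learning is soft voting, where the predicted probabilities of several models are averaged before a decision threshold is applied. The sketch below assumes three hypothetical models that each output a positive-class probability for the same three samples; the numbers are invented for illustration.

```python
def soft_vote(prob_lists):
    """Average per-sample probabilities across models (one inner list per model)."""
    if not prob_lists:
        raise ValueError("at least one model is required")
    n_models = len(prob_lists)
    return [sum(sample_probs) / n_models for sample_probs in zip(*prob_lists)]


model_probs = [
    [0.9, 0.4, 0.2],   # hypothetical model A
    [0.7, 0.6, 0.1],   # hypothetical model B
    [0.8, 0.3, 0.6],   # hypothetical model C
]
averaged = soft_vote(model_probs)
labels = [1 if p >= 0.5 else 0 for p in averaged]
# Models B and C each disagree with the others on one sample, but the
# averaged probabilities yield the labels [1, 0, 0].
```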

Tackling Imbalanced Datasets in Predictive Analysis Scenarios

Predictive analytics faces substantial obstacles with imbalanced datasets, in which some classes heavily outnumber others. This biases predictive models so that they fail to recognize minority classes. To address this issue, several effective strategies have been developed:

1. Resampling Techniques

- Easy Ensemble Method: This approach creates multiple subsets of the majority class, combines each with the minority class, and trains a separate classifier on each subset. Research indicates that the Easy Ensemble method can substantially improve model performance. In one credit scoring study, minority class recall rose from 0.49 to 0.82 after applying Easy Ensemble with Random Forest classifiers.
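The subset-building step of this method can be sketched as follows. This covers only the resampling: training one classifier per subset and combining their outputs is left out. The function name and the toy records are hypothetical, and the sketch assumes the majority class has at least as many records as the minority class.

```python
import random


def easy_ensemble_subsets(majority, minority, n_subsets, seed=0):
    """Build balanced training subsets.

    Each subset pairs a fresh random sample of the majority class (the same
    size as the minority class) with every minority record.
    """
    rng = random.Random(seed)
    subsets = []
    for _ in range(n_subsets):
        sampled_majority = rng.sample(majority, k=len(minority))
        subsets.append(sampled_majority + list(minority))
    return subsets


majority = [("repaid", i) for i in range(20)]     # hypothetical majority records
minority = [("default", i) for i in range(4)]     # hypothetical minority records

subsets = easy_ensemble_subsets(majority, minority, n_subsets=3, seed=42)
# Each of the 3 subsets holds 4 majority and 4 minority records, so a
# classifier trained on it sees a balanced class distribution.
```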

2. Ensemble Learning

- Balanced Random Forest (BRF): BRF extends the standard Random Forest by undersampling the majority class in each bootstrap sample to keep the class distribution balanced. This improves the model’s ability to classify minority instances accurately.

3. Cost-Sensitive Learning

- Class Weight Adjustment: Assigning higher misclassification costs to minority class samples during training makes the algorithm pay more attention to minority instances, which leads to better recall.
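A common way to pick these costs is to weight each class inversely to its frequency, using n_samples / (n_classes * class_count). The sketch below applies that heuristic to a hypothetical 90/10 label split; the function name is illustrative, and other weighting schemes are equally valid.

```python
from collections import Counter


def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}


labels = [0] * 90 + [1] * 10          # hypothetical imbalanced labels
weights = balanced_class_weights(labels)
# The minority class (1) gets weight 5.0 and the majority class (0) about 0.56,
# so each minority error costs roughly nine times as much during training.
```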

4. Evaluation Metrics Beyond Accuracy

- F1 Score: This metric, the harmonic mean of precision and recall, gives a more informative evaluation of minority class performance than accuracy when working with imbalanced datasets.

- ROC-AUC and Precision-Recall Curves: These tools offer deeper insights into model performance across different threshold settings, helping to identify the most appropriate balance between sensitivity and specificity. They are critical for AI algorithms in fraud detection or healthcare.
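ROC-AUC has a useful interpretation: it is the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition by comparing every positive and negative pair, which is fine for small examples but slow on large datasets. The function name and sample scores are illustrative.

```python
def roc_auc(y_true, scores):
    """ROC-AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in positives
        for n in negatives
    )
    return wins / (len(positives) * len(negatives))


y_true = [0, 0, 1, 1]                 # hypothetical labels
scores = [0.1, 0.4, 0.35, 0.8]        # hypothetical model scores
auc = roc_auc(y_true, scores)
# Three of the four positive/negative pairs are ordered correctly, so auc is 0.75.
```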

5. Anomaly Detection Techniques

- Isolation Forest and One-Class SVM: These algorithms are adept at identifying rare events or anomalies, making them suitable for detecting minority class instances in highly imbalanced datasets.

By integrating these approaches, deep learning models can make smarter, more inclusive predictions, ensuring every data point counts.

Enhancing Predictive Analytics with AutoML-Driven Deep Learning

Implementing AutoML tools in conjunction with complex model architectures can significantly streamline your workflow in the following ways:

- Accelerated Model Development: AutoML platforms like DataRobot have demonstrated the ability to expedite model development significantly. For instance, the healthcare IT provider Evariant achieved a 10-fold increase in deployment speed, reducing the process from months to weeks.

- Enhanced Predictive Accuracy: The implementation of AutoML has also led to notable improvements in predictive accuracy. Lenovo, for example, enhanced its predictive accuracy from 80% to 87.5% for targeted marketing campaigns, while also reducing model training time from four weeks to three days, resulting in an additional $2 million in sales.

- Operational Efficiency: By automating processes, AutoML contributes to significant operational efficiencies. For instance, Imagia reduced image processing time from 16 hours to just one hour using Google’s AutoML Vision, expediting cancer detection analyses.

- Scalability and Accessibility: AI integration via AutoML democratizes access to advanced analytics by enabling non-experts to develop and deploy models, thereby broadening the scope of predictive analytics applications across various industries.

Incorporating AutoML into deep learning workflows not only accelerates development but also enhances model performance, making predictive analytics more efficient and accessible.

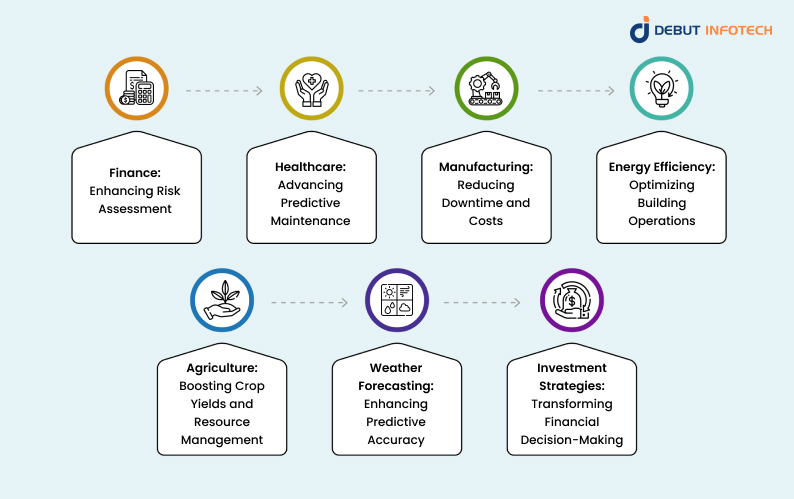

Real-World Impact of Deep Learning in Predictive Analytics

Deep learning is transforming predictive analytics across industries, enhancing efficiency and fostering innovation. The following are areas being impacted:

- Finance: Enhancing Risk Assessment

In the financial sector, deep learning algorithms analyze vast datasets to predict credit risks, enabling more accurate lending decisions and effective fraud detection. AI-powered fintech startups are leveraging these technologies to innovate and streamline financial services, enhancing operational efficiency and customer experience.

- Healthcare: Advancing Predictive Maintenance

In the healthcare sector, predictive maintenance powered by artificial intelligence (AI) is enhancing equipment reliability and reducing operational costs. For instance, Siemens Healthineers implemented AI-driven predictive maintenance for medical imaging equipment, resulting in a 32% reduction in MRI downtime and a 45% improvement in first-time fix rates, leading to average annual savings of $2.1 million per hospital.

- Manufacturing: Reducing Downtime and Costs

Manufacturers are adopting AI-driven predictive maintenance to minimize unplanned downtime and maintenance costs. Implementing such strategies can lead to a 30-50% reduction in unplanned downtime expenses, with predictive models achieving up to 90% accuracy in forecasting equipment malfunctions.

- Energy Efficiency: Optimizing Building Operations

AI is also being utilized to enhance energy efficiency in building operations. For instance, AI can help modernize outdated HVAC systems, leading to significant energy consumption reductions. A case study at 45 Broadway in Manhattan demonstrated that AI from BrainBox AI helped reduce HVAC energy consumption by 15.8%, saving $42,000 annually and cutting 37 metric tons of carbon dioxide.

- Agriculture: Boosting Crop Yields and Resource Management

In agriculture, deep learning predictive analytics enhances precision farming by guiding resource management and improving yields. Predictive models and smart technology help optimize water usage and mitigate climate change effects, contributing to food and water sustainability as population pressures rise.

- Weather Forecasting: Enhancing Predictive Accuracy

DeepMind’s AI weather prediction model, GenCast, has demonstrated high accuracy, outperforming traditional forecasting models. By analyzing four decades of weather data, GenCast provides quicker forecasts with lower computational costs, offering longer advance warnings for events like tropical cyclones.

- Investment Strategies: Transforming Financial Decision-Making

Artificial intelligence is revolutionizing investment management by analyzing vast financial datasets to identify patterns and inform decisions. Over 90% of investment managers are either using or planning to use AI, with 54% already integrating it into their strategies. This adoption enhances decision-making and can significantly improve investment outcomes.

Leveraging Recurrent Neural Networks (RNNs) for Predictive Insights

Recurrent Neural Networks (RNNs) excel in processing sequential data, making them a powerful tool for predictive analytics. Unlike traditional models, RNNs retain information from previous time steps, enabling accurate pattern recognition in time-dependent datasets. Applications that have benefited from RNNs include:

- Financial Markets: Predicting Stock Prices

RNNs have been effectively applied in financial markets to predict stock prices and market trends. Their ability to process sequential data allows them to analyze historical prices and trading volumes, providing valuable insights for investors.

- Demand Forecasting: Enhancing Accuracy in Retail

In the retail sector, accurate demand forecasting is crucial for inventory management and meeting customer needs. A study demonstrated that LSTM-based models outperformed traditional forecasting methods, achieving higher accuracy in predicting product demand.

- Speech Recognition: Reducing Error Rates

In the field of speech recognition, LSTM networks have significantly improved transcription accuracy. For instance, Google’s implementation of LSTMs in their speech recognition system led to a substantial reduction in transcription errors, enhancing user experience.

Integrating RNNs into predictive frameworks presents a powerful opportunity, as demonstrated by empirical research and their growing adoption across industries. Optimizing model training and validation is essential to unlocking their full potential.
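The way an RNN retains information from previous time steps can be illustrated with a single scalar recurrence, h_t = tanh(w_x * x_t + w_h * h_(t-1) + b). This is a deliberately tiny sketch: real networks use weight matrices and learn the weights during training, and LSTMs add gating on top. The weights and input sequences below are arbitrary example values.

```python
import math


def rnn_forward(inputs, w_x, w_h, bias, h0=0.0):
    """Run a one-unit RNN over a sequence and return the hidden state at each step."""
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x + w_h * h + bias)
        states.append(h)
    return states


# Two sequences that end with the same value but differ earlier on.
with_spike = rnn_forward([1.0, 0.0], w_x=0.5, w_h=0.8, bias=0.0)
without_spike = rnn_forward([0.0, 0.0], w_x=0.5, w_h=0.8, bias=0.0)

# The final states differ because the hidden state carries the earlier input forward.
print(with_spike[-1], without_spike[-1])
```

Setting `w_h` to zero removes the memory entirely, and the final state then depends only on the last input.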

Overcoming the Risks of Predictive Deep Learning with Debut Infotech

Despite its transformative potential, predictive deep learning comes with significant challenges. Many AI projects fail due to the following reasons:

1. High Energy Consumption

Training large-scale AI models demands substantial energy resources, raising environmental and sustainability concerns. For instance, advanced AI models like OpenAI’s GPT-4 and Meta’s Llama 3.1 each require around 30 megawatts for training, contributing significantly to electricity consumption.

- Debut Infotech’s Solution: We prioritize energy-efficient AI solutions by adopting optimized algorithms and leveraging cloud-based infrastructures, reducing the environmental footprint without compromising performance.

2. Data Quality and Quantity Issues

The success of deep learning models heavily relies on the availability of large, high-quality datasets. However, many AI projects fail due to inadequate or poor-quality data, leading to inaccurate predictions. Notably, a significant number of AI initiatives do not deliver expected results because of improper model application and unreliable datasets.

- Debut Infotech’s Solution: We implement robust data preprocessing and augmentation techniques, ensuring that our models are trained on clean, comprehensive, and representative datasets to enhance predictive accuracy.

3. Overfitting and Generalization Challenges

Deep learning models often perform exceptionally well on training data but struggle to generalize to unseen data, a problem known as overfitting. This issue can lead to unreliable predictions in real-world applications.

- Debut Infotech’s Solution: Our team employs advanced regularization methods and cross-validation strategies during model training to mitigate overfitting, ensuring that our models maintain high performance on new, unseen data.

4. Explainability and Transparency

The complex nature of deep learning models often results in a “black box” effect, where understanding the decision-making process becomes challenging. This lack of transparency can hinder trust and acceptance, especially in critical sectors like healthcare and finance.

- Debut Infotech’s Solution: We integrate explainable AI (XAI) frameworks into our solutions, providing clear insights into model decisions, which enhances trust and facilitates compliance with regulatory standards.

Final Thoughts

Deep learning in predictive analytics is giving businesses a sharper edge, helping them uncover hidden patterns and make smarter decisions backed by data. From choosing between AI and machine learning strategies to predicting customer behavior, its potential is vast. However, challenges like data quality, model interpretability, and high computational costs remain roadblocks to seamless adoption.

That’s where expert guidance makes a difference. At Debut Infotech, we help businesses navigate the complexities of AI, ensuring their deep learning models are not only powerful but also reliable, scalable, and aligned with real-world needs. Whether you’re just starting or looking to refine your AI strategy, our team is here to help.

Let’s build the future of predictive analytics together. Reach out today!

Frequently Asked Questions (FAQs)

A. Yes! Once a deep learning model is trained, it can analyze new data and make accurate predictions. For instance, a model trained to recognize dog images can successfully identify dogs in unseen photos.

A. By leveraging predictive analytics, organizations can uncover hidden patterns in their data to identify risks and seize new opportunities. For example, models can be built to reveal connections between different behavioral factors, providing deeper insights for strategic decision-making.
