Common AI Development Issues and How to Overcome Them

March 19, 2025

Artificial intelligence has reshaped how businesses innovate, yet developing it presents a different set of challenges from traditional software engineering. Conventional development follows structured phases such as planning, design, coding, testing, and deployment, whereas AI projects demand a data-centric approach. Success depends not just on writing code but on acquiring high-quality data, refining it, and ensuring models learn effectively.

This shift in methodology brings its own complex AI development issues, requiring developers to rethink strategies at every stage. From data inconsistencies to algorithmic biases and scalability constraints, AI systems pose hurdles that can impact performance and reliability. Recognizing these challenges is the first step toward building intelligent solutions that deliver value.

Drawing from real-world applications, this article explores the most frequent roadblocks in AI development and provides actionable solutions to navigate them. Whether you’re integrating artificial intelligence technology into an enterprise system or developing a standalone model, understanding these obstacles will help you build smarter, more resilient AI solutions.

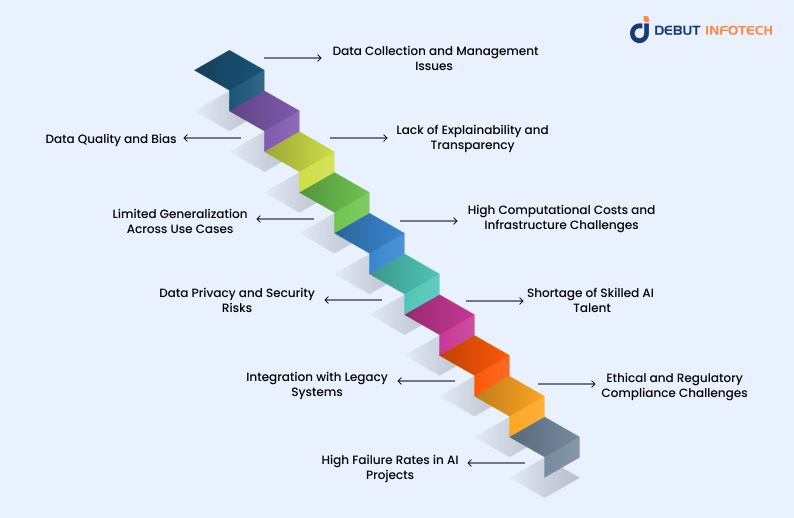

AI Development Issues and Solutions

Below are some of the most pressing AI development challenges and the strategies to tackle them.

1. Data Collection and Management Issues

AI systems are only as good as the data they rely on. Poor data collection processes can lead to unreliable AI models, limiting AI’s effectiveness. Many organizations struggle with fragmented data sources, inconsistent formats, and missing values, making it difficult to develop models that perform accurately in real-world scenarios.

- Solution

The key to solving data collection issues lies in establishing structured data pipelines. Organizations should automate data gathering, implement real-time validation techniques, and adopt standardized data formats to improve consistency. Additionally, leveraging cloud-based data lakes can help manage large-scale datasets efficiently, ensuring AI models have access to accurate and well-organized data.
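As a rough illustration of validating records as they enter a pipeline, the sketch below checks each record against a small schema and separates clean records from rejected ones. The field names and the `SCHEMA` layout are hypothetical and chosen only for the example; a production pipeline would more likely use a dedicated validation library.

```python
from datetime import datetime

# Hypothetical schema for illustration: field name -> (expected type, required?)
SCHEMA = {
    "customer_id": (str, True),
    "amount": (float, True),
    "created_at": (str, True),  # expected to hold an ISO 8601 date
    "region": (str, False),
}

def validate_record(record):
    """Return a list of problems found in one incoming record (empty if clean)."""
    problems = []
    for field, (expected_type, required) in SCHEMA.items():
        value = record.get(field)
        if value is None:
            if required:
                problems.append(f"missing required field: {field}")
            continue
        if not isinstance(value, expected_type):
            problems.append(
                f"{field}: expected {expected_type.__name__}, got {type(value).__name__}"
            )
    created = record.get("created_at")
    if isinstance(created, str):
        try:
            datetime.fromisoformat(created)
        except ValueError:
            problems.append("created_at: not an ISO 8601 date")
    return problems

def split_valid_invalid(records):
    """Route clean records onward and keep rejected ones with their problems."""
    valid, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the rejected records together with the reasons they failed makes it easier to trace inconsistencies back to the source system instead of silently dropping data.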

2. Data Quality and Bias

The effectiveness of an LLM or any other AI system depends on the quality of the data it is trained on. If the training dataset contains errors or biases, or lacks diversity, the AI system may produce flawed or unfair results. This is especially problematic in industries like finance, healthcare, and recruitment, where biased AI decisions can lead to serious ethical concerns.

- Solution

To improve data quality, organizations must implement rigorous data preprocessing steps, including data cleaning, augmentation, and normalization. Bias detection tools should be integrated into the AI pipeline to identify and mitigate unfair patterns in data. Furthermore, businesses should source data from diverse demographics so that AI models learn from a balanced and representative dataset.
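To make two of these preprocessing steps concrete, here is a minimal sketch: one function fills missing values with the mean and applies min-max normalization, and another flags groups that make up too small a share of the dataset. The function names and the 20% `min_share` threshold are assumptions for illustration, not recommended values.

```python
from collections import Counter

def impute_and_normalize(values):
    """Fill missing values (None) with the mean, then min-max scale to [0, 1]."""
    present = [v for v in values if v is not None]
    if not present:
        raise ValueError("no observed values to impute from")
    mean = sum(present) / len(present)
    filled = [mean if v is None else v for v in values]
    lo, hi = min(filled), max(filled)
    if hi == lo:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in filled]
    return [(v - lo) / (hi - lo) for v in filled]

def underrepresented_groups(groups, min_share=0.2):
    """Return the groups whose share of the dataset falls below min_share."""
    counts = Counter(groups)
    total = len(groups)
    return sorted(g for g, c in counts.items() if c / total < min_share)
```

A representation check like this only shows who is missing from the data. It does not prove that a model trained on the data is fair, so it complements bias testing rather than replacing it.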

3. Lack of Explainability and Transparency

Many AI models, especially deep learning systems, operate as “black boxes,” meaning their decision-making process is unclear. This lack of transparency makes it difficult to understand why a model arrived at a particular conclusion, which can reduce trust and make AI adoption challenging in sectors that require accountability, such as finance and healthcare.

- Solution

Explainable AI (XAI) techniques, such as feature importance analysis and model interpretability frameworks like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations), can provide insights into how AI models make decisions. Organizations should also document AI workflows and provide end users with clear explanations of AI-generated predictions to enhance trust and transparency.
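One simple form of feature importance analysis is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a plain-Python version of that idea, not the SHAP or LIME API, and `toy_model` is a hypothetical stand-in for a trained model.

```python
import random

def accuracy(predict, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, y in zip(rows, labels) if predict(row) == y)
    return correct / len(rows)

def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        shuffled_rows = [
            list(row[:j]) + [column[i]] + list(row[j + 1:])
            for i, row in enumerate(rows)
        ]
        importances.append(baseline - accuracy(predict, shuffled_rows, labels))
    return importances

# Hypothetical stand-in for a trained model: it only looks at feature 0.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0
```

A feature the model ignores gets an importance of zero because shuffling it changes no predictions, while a feature the model depends on usually shows a clear drop in accuracy.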

4. Limited Generalization Across Use Cases

AI models are typically trained on specific datasets and environments. As a result, they often struggle when applied to new scenarios outside their training data. This limitation reduces AI’s adaptability and can lead to poor performance in real-world applications.

- Solution

To improve generalization, organizations can implement techniques such as transfer learning, where AI models reuse knowledge from previous tasks to perform better in new situations. Additionally, continuous learning and model retraining with fresh data can help AI systems adapt to dynamic environments, improving their long-term effectiveness.
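Retraining with fresh data raises a practical question: when is retraining actually needed? One possible signal is a shift in the input data. The sketch below compares recent values of a single feature against the training data and flags a large shift. The function names and the 0.5 threshold are assumptions for illustration, and real systems typically use richer drift tests across many features.

```python
from statistics import mean, stdev

def drift_score(training_values, recent_values):
    """Shift of the recent mean, measured in training standard deviations."""
    spread = stdev(training_values)
    shift = abs(mean(recent_values) - mean(training_values))
    if spread == 0:
        # Training data was constant: any change at all counts as drift.
        return 0.0 if shift == 0 else float("inf")
    return shift / spread

def needs_retraining(training_values, recent_values, threshold=0.5):
    """Flag the feature when its mean has moved more than `threshold` deviations."""
    return drift_score(training_values, recent_values) > threshold
```

For example, `needs_retraining([1, 2, 3, 4, 5], [6, 7, 8])` flags drift because the recent mean sits well outside the range the model was trained on.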

5. High Computational Costs and Infrastructure Challenges

Developing, training, and deploying AI models requires significant computational power. Training complex LLMs or building AI copilot solutions demands high-performance GPUs and cloud-based resources, which can be expensive for small businesses and startups.

- Solution

Organizations can optimize AI models by using techniques like pruning, quantization, and model distillation, which reduce computational requirements, often with only a small loss in accuracy. Cloud-based AI platforms also offer scalable solutions, allowing businesses to access high-performance computing resources on a pay-as-you-go basis instead of investing in their own hardware up front.
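To show what quantization means in practice, here is a minimal sketch of symmetric 8-bit quantization applied to a list of weights. It illustrates the idea only. Deep learning frameworks ship their own quantization tooling, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric 8-bit quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]
```

Each weight is stored as a small integer plus one shared scale factor, which takes roughly a quarter of the memory of 32-bit floats. The price is a rounding error of at most half the scale per weight, which is why accuracy should be re-measured after quantizing.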

6. Data Privacy and Security Risks

AI systems often process large volumes of sensitive information, making them attractive targets for cyberattacks and data breaches. Unauthorized access to AI-driven insights can lead to significant financial and reputational damage. Additionally, AI models must comply with data protection regulations like GDPR and CCPA to avoid legal consequences.

- Solution

Organizations should implement strong encryption, access control mechanisms, and secure data-sharing protocols to protect sensitive information. Federated learning, a technique that trains AI models on decentralized data without moving raw records off the devices or servers that hold them, can also enhance privacy and security. Regular compliance audits and adherence to data protection laws further support responsible AI deployment.
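The core loop of federated learning can be sketched in a few lines. Each client improves the shared model on its own data, and only the updated weights are sent back to be averaged. The example below uses a one-parameter linear model and hypothetical function names; it leaves out the secure aggregation and communication layers a real deployment would need.

```python
def local_update(weights, data, lr=0.1):
    """One gradient step of the model y = w * x on a client's private data."""
    w = weights[0]
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return [w - lr * grad]

def federated_average(client_weights, client_sizes):
    """Average client weights, weighted by each client's number of examples."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

def run_round(global_weights, clients):
    """One round: clients train locally, the server only ever sees weights."""
    updates = [local_update(global_weights, data) for data in clients]
    sizes = [len(data) for data in clients]
    return federated_average(updates, sizes)
```

The server never receives the `(x, y)` pairs themselves. Model updates can still leak information in some settings, though, so federated learning is usually combined with the encryption and access controls described above.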

7. Shortage of Skilled AI Talent

AI development requires expertise in data science, machine learning, and software engineering. However, there is a significant talent shortage, making it difficult for businesses to find qualified AI professionals. This gap slows down AI adoption and innovation.

- Solution

Companies can address this challenge by upskilling their existing workforce through AI training programs and workshops. Partnering with universities and AI research institutions can also help businesses gain access to top talent. Additionally, businesses can explore AI-as-a-service solutions that provide pre-built AI models, reducing the need for in-house expertise.

8. Integration with Legacy Systems

Many organizations still rely on outdated IT infrastructure that lacks the flexibility to support AI solutions. This results in compatibility issues and inefficient AI implementation.

- Solution

To ensure smooth integration, businesses should use middleware solutions and APIs that allow AI models to interact with legacy systems. Additionally, container tools like Docker, together with orchestrators such as Kubernetes, let AI applications run in isolated environments, making integration with older infrastructure more manageable.
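A middleware layer often comes down to an adapter that translates between the legacy format and what the model expects. The sketch below assumes a hypothetical fixed-width record layout and a placeholder `score` function standing in for a real model call; none of these names come from an actual system.

```python
# Hypothetical fixed-width layout of a legacy record: (field, start, end)
LEGACY_LAYOUT = [("account", 0, 8), ("balance", 8, 18), ("days_overdue", 18, 22)]

def parse_legacy_record(line):
    """Translate one fixed-width legacy record into a typed dict."""
    raw = {name: line[start:end].strip() for name, start, end in LEGACY_LAYOUT}
    return {
        "account": raw["account"],
        "balance": float(raw["balance"]),
        "days_overdue": int(raw["days_overdue"]),
    }

def score(features):
    """Placeholder for a real model call, such as a request to a model service."""
    return 1.0 if features["days_overdue"] > 30 else 0.0

def handle_legacy_line(line):
    """Adapter: legacy record in, legacy-formatted risk score out."""
    features = parse_legacy_record(line)
    risk = score(features)
    # Reply in a fixed-width format the legacy system already understands.
    return f"{features['account']:<8}{risk:04.2f}"
```

Because all format knowledge lives in the adapter, the legacy system and the model can each change without the other needing to know.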

9. Ethical and Regulatory Compliance Challenges

AI decision-making can have ethical implications, especially in sensitive areas like hiring, healthcare, and criminal justice. Regulatory frameworks governing AI use are evolving, and businesses must stay compliant to avoid legal risks.

- Solution

Organizations should implement ethical AI guidelines that promote fairness, accountability, and transparency. Conducting regular AI audits and bias testing can help identify potential ethical risks before deployment. Moreover, companies should establish AI governance teams responsible for ensuring compliance with industry regulations.
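A basic bias test compares how often each group receives a positive decision. The sketch below computes per-group selection rates and the ratio of the lowest to the highest rate. A ratio below 0.8 is a commonly cited rule of thumb for closer review, but the right threshold and metric depend on the use case and jurisdiction, so treat both as assumptions here.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (1) per group."""
    totals, positives = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + decision
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0
```

Running a check like this on model outputs before each release gives a governance team a repeatable number to track over time.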

10. High Failure Rates in AI Projects

Many AI projects fail due to unrealistic expectations, unclear objectives, or lack of proper planning. Businesses often invest in AI without a clear understanding of how it aligns with their goals, leading to wasted resources.

- Solution

Successful AI projects start with well-defined use cases. Companies should adopt an iterative development approach, where AI models are tested in smaller phases before full-scale deployment. Continuous monitoring, feedback loops, and performance optimization ensure that AI solutions remain effective and valuable over time.
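Continuous monitoring can start small. The sketch below tracks accuracy over the most recent predictions and raises a flag when it falls below a target. The class name, window size, and alert threshold are hypothetical choices for illustration.

```python
from collections import deque

class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` predictions."""

    def __init__(self, window=100, alert_below=0.9):
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically
        self.alert_below = alert_below

    def record(self, prediction, actual):
        """Store whether one prediction turned out to be correct."""
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        """Accuracy over the current window, or None before any feedback arrives."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        """True when recent accuracy has dropped below the target."""
        acc = self.accuracy()
        return acc is not None and acc < self.alert_below
```

Feeding real outcomes back into a monitor like this closes the feedback loop: a sustained drop is a concrete trigger to investigate, retrain, or roll back.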

Final Thoughts

With AI becoming a driving force in business transformation, companies are constantly looking for reliable, scalable, and efficient AI solutions. However, the journey to AI adoption is often riddled with challenges, ranging from data inconsistencies and integration issues to skill shortages and ethical concerns. Overcoming these hurdles requires not just technical expertise but also a deep understanding of industry-specific needs.

At Debut Infotech, we help organizations navigate AI complexities with our AI development services. Our team works toward seamless AI integration, helping companies harness the full potential of artificial intelligence while mitigating the risks associated with deployment. Whether the project involves AI chatbot development, AI copilot development, or AI consulting services, the right approach is what makes deployment succeed.

Embracing AI is no longer a choice but a necessity for businesses aiming to stay competitive in the digital era. Partnering with the right AI development company can make all the difference. Debut Infotech stands as a trusted name in AI innovation, ensuring organizations not only adapt but thrive in this AI-driven world. Contact us today and let’s build the future together.

Frequently Asked Questions (FAQs)

AI development comes with several hurdles that impact efficiency and adoption. Some of the key challenges include:

1. Data quality and bias – Ensuring datasets are clean, diverse, and unbiased.

2. Lack of skilled professionals – AI expertise is in high demand but in short supply.

3. High computational costs – Training AI models requires significant resources.

4. Model interpretability – Making AI decisions more transparent and understandable.

5. Integration issues – Seamlessly incorporating AI into existing workflows.

Addressing AI challenges requires a strategic approach, including:

1. Partnering with experienced AI professionals.

2. Investing in high-quality data management practices.

3. Using scalable cloud-based AI solutions.

4. Implementing explainable AI (XAI) techniques for better transparency.

As AI adoption grows, ethical concerns also emerge, including:

1. Bias in AI models – Preventing unfair decision-making.

2. Data privacy risks – Ensuring user data remains secure.

3. Job displacement – Managing automation’s impact on employment.

4. Security threats – Protecting AI systems from misuse.

Mitigating AI bias involves:

1. Using diverse and representative training datasets.

2. Continuously testing AI models for unintended biases.

3. Implementing fairness-aware machine learning algorithms.

4. Encouraging transparency in AI decision-making.

Scaling AI solutions can be complex due to:

1. High infrastructure costs for training large models.

2. Difficulty in real-time AI processing for big data.

3. Ensuring AI systems remain efficient under heavy loads.

4. Need for ongoing model monitoring and optimization.

AI systems rely on high-quality data to function optimally. Poor data can lead to:

1. Incorrect or biased AI predictions.

2. Inefficient training processes.

3. Reduced model accuracy and reliability.

4. Compliance issues with data regulations.

To stay ahead, companies should:

1. Invest in AI talent and continuous learning.

2. Regularly update and retrain AI models.

3. Adopt flexible and modular AI architectures.

4. Align AI initiatives with evolving business needs.

Small businesses can leverage AI effectively by:

1. Utilizing cloud-based AI solutions to reduce infrastructure costs.

2. Starting with low-code or no-code AI platforms.

3. Partnering with AI-as-a-Service (AIaaS) providers.

4. Focusing on specific AI use cases that bring immediate ROI.

AI systems can be vulnerable to various security threats, such as:

1. Adversarial attacks – Manipulating AI models to produce incorrect results.

2. Data breaches – Exposing sensitive information used in AI training.

3. Model poisoning – Inserting corrupted data to influence AI behavior.

4. AI-generated deepfakes – Creating deceptive content for misinformation.

Improving AI interpretability involves:

1. Using Explainable AI (XAI) techniques to clarify model decisions.

2. Implementing decision trees and rule-based systems for simpler AI logic.

3. Providing user-friendly dashboards to visualize AI predictions.

4. Ensuring AI models follow ethical and regulatory guidelines for accountability.
