

Mistakes to Avoid in Generative AI Development

March 27, 2025


Generative AI is transforming industries by automating content creation, improving decision-making, and streamlining workflows. The global AI market is projected to grow from $214.6 billion in 2024 to $1.34 trillion by 2030, a compound annual growth rate (CAGR) of 35.7%.

However, building and maintaining reliable, efficient AI models is challenging. Research suggests that more than 80% of AI projects fail, roughly twice the failure rate of information technology projects that do not involve AI. Common causes include unclear objectives, poor data quality, inadequate infrastructure, and weak model evaluation.

Organizations that want to build robust, efficient AI systems need to understand the errors that typically plague generative AI development. By adopting best practices and anticipating potential shortcomings, businesses can ensure that their AI models are not only functional but also responsible, transparent, and aligned with their long-term objectives.

In this article, we cover the common mistakes organizations make, along with illustrative examples and practical ways to avoid them.


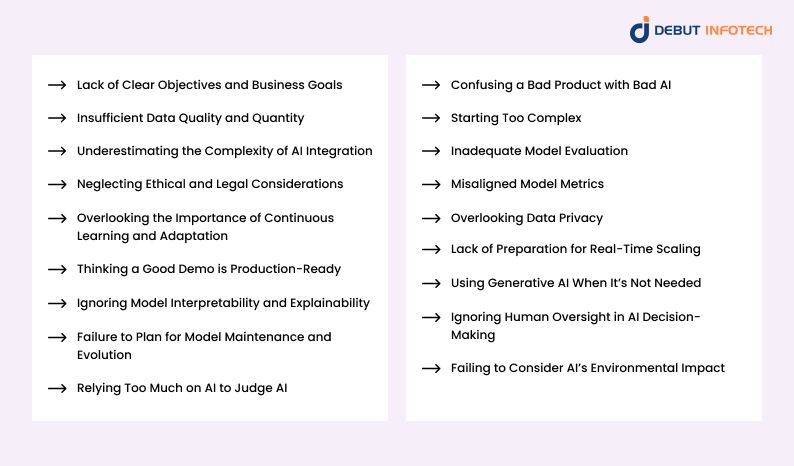

18 Common Mistakes in Generative AI Development and How to Avoid Them

Because generative AI development comes with significant challenges, many teams fall into common pitfalls that undermine AI performance, reliability, and ethical compliance.

Here are some generative AI development pitfalls to avoid:

1. Lack of Clear Objectives and Business Goals

Without a clear goal, a generative AI project becomes an expensive experiment with no measurable impact. Many companies pour money into generative AI without first identifying how it will help their business.

Consider an e-commerce company that wants AI-powered personalized recommendations but never defines its primary goal: boosting sales, improving engagement, or reducing returns. Without a clear objective to optimize for, the model produces unfocused suggestions that frustrate customers and waste resources.

To avoid this, organizations should set measurable objectives, such as improving content generation efficiency or reducing manual workload by a set percentage. Clear KPIs ensure AI solutions contribute to strategic success rather than existing as isolated tools. Cross-functional collaboration helps align AI development with operational priorities, ensuring practical application.
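One way to make objectives measurable is to record each KPI with a baseline and a target, then check pilot results against both. The sketch below is a minimal illustration under that assumption; the `Kpi` class, metric names, and numbers are hypothetical, not figures from a real project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Kpi:
    """A measurable objective for an AI initiative (illustrative fields)."""
    name: str
    baseline: float
    target: float
    higher_is_better: bool = True

    def met(self, observed: float) -> bool:
        """True if the observed value reaches the target in the right direction."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target

    def progress(self, observed: float) -> float:
        """Fraction of the baseline-to-target gap closed (may exceed 1.0 or be negative)."""
        gap = self.target - self.baseline
        return (observed - self.baseline) / gap if gap else 1.0


# Hypothetical KPIs and pilot results for a recommendation project.
kpis = [
    Kpi("conversion_rate", baseline=0.021, target=0.025),
    Kpi("return_rate", baseline=0.12, target=0.10, higher_is_better=False),
]
pilot_results = {"conversion_rate": 0.024, "return_rate": 0.095}

for kpi in kpis:
    observed = pilot_results[kpi.name]
    print(f"{kpi.name}: met={kpi.met(observed)}, progress={kpi.progress(observed):.0%}")
```

In this made-up pilot, the conversion target is missed (about 75% of the gap closed) while the return-rate target is beaten, a distinction that only becomes visible once both goals are written down.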

2. Insufficient Data Quality and Quantity

Generative AI needs large, high-quality datasets. Bad data results in biased outputs, errors, and unreliable performance.

One notorious failure came in 2016, when Microsoft’s chatbot Tay, which learned from unfiltered interactions with Twitter users, began posting offensive content and was taken offline within a day of its launch. The episode underlines the danger of training AI on unvetted data.

Organizations need to make sure their data is diverse, well-labeled, and regularly updated. Rigorous preprocessing steps such as data augmentation, noise reduction, and bias detection improve model reliability. Ongoing data audits and feedback loops help maintain accuracy and keep the model from degrading over time. A well-structured data pipeline improves both training and real-world performance.
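Several of these checks can run automatically before every training job. The following is a minimal sketch, assuming records are dicts with `text` and `label` fields; `BLOCKLIST` is a hypothetical placeholder for a proper toxicity filter, and augmentation is left out.

```python
import re
from collections import Counter

# Hypothetical placeholder; a real pipeline would use a maintained toxicity filter.
BLOCKLIST = {"badword"}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants count as duplicates."""
    return re.sub(r"\s+", " ", text).strip().lower()


def clean_dataset(records):
    """Drop empty, duplicate, and blocklisted examples; return kept rows and a report."""
    seen, kept, report = set(), [], Counter()
    for rec in records:
        text = normalize(rec["text"])
        if not text:
            report["empty"] += 1
        elif text in seen:
            report["duplicate"] += 1
        elif BLOCKLIST & set(text.split()):
            report["blocked"] += 1
        else:
            seen.add(text)
            kept.append({**rec, "text": text})
    return kept, report


def label_balance(records):
    """Share of each label, to spot skewed or missing classes."""
    counts = Counter(r["label"] for r in records)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}


raw = [
    {"text": "Great product!", "label": "pos"},
    {"text": "great   product!", "label": "pos"},
    {"text": "   ", "label": "neg"},
    {"text": "Arrived broken", "label": "neg"},
    {"text": "total badword here", "label": "neg"},
]
kept, report = clean_dataset(raw)
print(report, label_balance(kept))
```

Keeping the per-reason counts in `report` gives the data audit a trail: a sudden jump in duplicates or blocked rows is itself a signal that an upstream source has changed.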

3. Underestimating the Complexity of AI Integration

AI is not a plug-and-play solution. Many organizations assume generative AI can seamlessly integrate with existing systems, only to face technical roadblocks.

For instance, integrating AI-powered content generation into a legacy publishing platform may require extensive API development and infrastructure upgrades.

A phased implementation helps mitigate risk: begin with small-scale deployments, gather feedback, and refine processes before full integration. Businesses that hire a provider of generative AI integration services should choose only a reputable one.

Either way, collaboration between AI engineers, IT teams, and end users keeps the solution compatible with existing workflows. Without proper planning, companies risk delays, higher costs, and an AI rollout that disrupts operations instead of enhancing them.
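Part of integrating AI into a legacy system is making sure the existing code path still works when the model is slow or unavailable. A minimal sketch, assuming a hypothetical `ai_summary` model call and an existing `legacy_summary` function, wraps the AI call in a timeout and falls back to the legacy behavior:

```python
import concurrent.futures
import time


def legacy_summary(article: str) -> str:
    """Existing behavior: first 100 characters (hypothetical)."""
    return article[:100]


def ai_summary(article: str) -> str:
    """Stand-in for a hypothetical generative model call."""
    time.sleep(0.05)  # simulated model latency
    return "AI: " + article[:50]


_executor = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def summarize(article: str, timeout_s: float = 1.0) -> tuple[str, str]:
    """Try the AI path; fall back to the legacy path on any error or timeout."""
    future = _executor.submit(ai_summary, article)
    try:
        return future.result(timeout=timeout_s), "ai"
    except Exception:  # covers concurrent.futures.TimeoutError and model errors
        return legacy_summary(article), "legacy"


print(summarize("Quarterly results beat expectations."))
print(summarize("Quarterly results beat expectations.", timeout_s=0.001))
```

A timed-out call keeps running in its worker thread, so a production version would also set a timeout on the underlying network client. Logging which path served each request provides the feedback a phased rollout depends on.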

4. Neglecting Ethical and Legal Considerations

AI-generated content can raise serious ethical concerns, from biased language to deepfake misuse.

In 2023, AI-generated images of politicians circulated online, misleading the public.

To avoid this, organizations must address ethical risks proactively through fairness audits, bias detection tools, and transparency policies. Complying with regulations such as GDPR and CCPA is essential for responsible data handling.

Developing AI within ethical boundaries involves training models on diverse datasets, setting clear usage policies, and establishing human oversight mechanisms. Companies that fail to prioritize ethical AI risk reputational damage and legal repercussions. Responsible AI development fosters trust and minimizes unintended consequences.
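One simple bias-detection technique is counterfactual testing: send prompts that are identical except for a demographic cue and compare the outputs. This sketch assumes a hypothetical `generate` function (stubbed here) and a toy word-count scorer; the name lists are illustrative proxies, and a real audit would use larger, validated term sets and a meaningful scorer such as a sentiment or toxicity classifier.

```python
from itertools import product

TEMPLATE = "Write a reference for {name}, a {role}."
# Illustrative name lists used as demographic proxies; a real audit needs validated sets.
NAME_GROUPS = {"group_a": ["James", "Robert"], "group_b": ["Mary", "Linda"]}
ROLES = ["nurse", "engineer"]


def generate(prompt: str) -> str:
    """Hypothetical stand-in for the model under test."""
    return f"Draft reference letter: {prompt}"


def word_count(text: str) -> float:
    """Toy scorer; swap in sentiment, toxicity, or another task-relevant measure."""
    return float(len(text.split()))


def counterfactual_gap(generate, score, threshold=0.1):
    """Mean score per group with only the name varied, plus the relative gap."""
    means = {}
    for group, names in NAME_GROUPS.items():
        scores = [score(generate(TEMPLATE.format(name=n, role=r)))
                  for n, r in product(names, ROLES)]
        means[group] = sum(scores) / len(scores)
    high, low = max(means.values()), min(means.values())
    gap = (high - low) / high if high else 0.0
    return means, gap, gap > threshold


means, gap, flagged = counterfactual_gap(generate, word_count)
print(means, f"gap={gap:.2f}", "FLAG" if flagged else "ok")
```

Because only the name changes between prompts, any consistent difference in scores points at the model rather than at the task.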

5. Overlooking the Importance of Continuous Learning and Adaptation

AI models degrade over time if they are not regularly updated. A chatbot trained on outdated data may fail to understand new slang or emerging industry terminology, which makes interactions ineffective.

Netflix, for example, continuously updates its recommendation algorithm to reflect changes in user behavior and keep its suggestions relevant.

Organizations should implement automated retraining mechanisms using fresh data to prevent performance decline. Monitoring tools can detect model drift, triggering updates before accuracy suffers. Without continuous learning, generative AI solutions become obsolete, reducing their effectiveness. Regular updates and user feedback integration align AI models with evolving needs, maintaining their value over time.
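Drift monitoring can start with a comparison between the input distribution at training time and recent production inputs. One common statistic is the Population Stability Index (PSI), the sum over bins of (actual − expected) × ln(actual / expected). The sketch below is a plain-Python version for a single numeric feature; the 0.25 retraining threshold is a widely quoted rule of thumb rather than a standard, and the bin count is an assumption.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of `actual` against a baseline `expected` sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)  # clamp outliers to edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def needs_retraining(score, threshold=0.25):
    """0.25 is a commonly quoted rule of thumb for a significant shift."""
    return score > threshold


baseline = [i / 100 for i in range(100)]      # e.g. a scaled input feature at training time
recent = [0.5 + i / 200 for i in range(100)]  # recent traffic shifted toward higher values
score = psi(baseline, recent)
print(f"PSI={score:.2f}", "retrain" if needs_retraining(score) else "ok")
```

In practice the check would run on a schedule for each monitored feature, with a triggered retraining job or an alert to the owning team when the threshold is crossed.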

6. Thinking a Good Demo is Production-Ready

A polished generative AI demo often performs well under controlled conditions but fails in real-world use.

For example, a startup’s AI-generated art tool performs well in controlled demos but fails in production under heavier demand and more varied inputs.

Overfitting to a limited dataset can create false confidence in a model’s capabilities. Rigorous real-world testing, including stress tests, edge-case analysis, and user interactions, is essential before deployment.

Organizations, working with an experienced AI development company where needed, should simulate diverse scenarios to make sure the AI performs reliably under varying conditions. A staged rollout, starting with beta users before scaling, helps uncover flaws early. Relying on a demo without extensive testing leads to underperforming AI applications.
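A staged rollout needs a stable way to decide which users see the new feature. Hashing the user ID gives each user a fixed bucket, so raising the percentage only adds users and never reshuffles existing ones. A minimal sketch; the feature name and stage percentages are hypothetical:

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: float) -> bool:
    """Deterministically place a user in one of 10,000 buckets; admit the lowest `percent`%."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return bucket < percent * 100  # percent=5 admits buckets 0..499


# Hypothetical stages: beta users first, then wider exposure.
STAGES = [1, 5, 25, 100]
users = [f"user{i}" for i in range(10_000)]
for pct in STAGES:
    share = sum(in_rollout(u, "art_tool", pct) for u in users) / len(users)
    print(f"{pct}% stage -> {share:.1%} of users")
```

Including the feature name in the hash keeps different experiments from always landing on the same early users.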

7. Ignoring Model Interpretability and Explainability

Black-box AI systems limit trust and accountability. If a generative AI model recommends denying a loan, financial institutions must justify the decision.

Without explainability, users cannot verify model fairness or detect biases.

Implementing interpretability techniques, such as SHAP values or LIME, helps reveal how AI reaches conclusions. The healthcare sector, for example, uses explainable AI to validate diagnostic models, ensuring physicians understand AI-generated insights. Transparent AI models foster user trust and regulatory compliance. Organizations should prioritize model interpretability by integrating visualization tools, clear documentation, and decision-tracing mechanisms. This enhances accountability and facilitates responsible AI adoption.
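SHAP and LIME are usually applied through their own libraries. For a linear scoring model with independent features, however, SHAP values have a closed form, phi_i = w_i * (x_i - mean of x_i), which makes the idea easy to see without dependencies. This is a swapped-in simplification, not a general SHAP implementation, and the model, weights, and features below are hypothetical:

```python
# Hypothetical linear credit-scoring model: weights and features are illustrative only.
WEIGHTS = {"income_k": 0.03, "debt_to_income": -4.0, "late_payments": -0.8}
BIAS = 1.0


def score(applicant: dict) -> float:
    return BIAS + sum(w * applicant[f] for f, w in WEIGHTS.items())


def linear_shap(applicant: dict, background: list[dict]) -> dict:
    """Exact SHAP values for a linear model with independent features:
    phi_i = w_i * (x_i - mean of x_i over the background data)."""
    n = len(background)
    means = {f: sum(row[f] for row in background) / n for f in WEIGHTS}
    return {f: w * (applicant[f] - means[f]) for f, w in WEIGHTS.items()}


background = [
    {"income_k": 60, "debt_to_income": 0.30, "late_payments": 1},
    {"income_k": 80, "debt_to_income": 0.20, "late_payments": 0},
    {"income_k": 40, "debt_to_income": 0.40, "late_payments": 2},
]
applicant = {"income_k": 45, "debt_to_income": 0.50, "late_payments": 3}

contributions = linear_shap(applicant, background)
for feature, phi in sorted(contributions.items(), key=lambda kv: kv[1]):
    print(f"{feature:>15}: {phi:+.2f}")
```

The contributions sum to the difference between this applicant’s score and the average background score, so a reviewer can see that, in this made-up case, late payments account for more than half of the shortfall.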

8. Failure to Plan for Model Maintenance and Evolution

AI systems require continuous oversight to remain effective. Google’s search algorithms are updated frequently to adapt to changing user behavior.

Without similar maintenance, generative AI models may produce outdated or irrelevant outputs. Organizations should establish long-term support plans, including scheduled retraining, version control, and performance monitoring. A dedicated team should track model health using validation metrics, such as accuracy and response coherence.

Ignoring maintenance results in declining model quality, security vulnerabilities, and user dissatisfaction. AI projects must be treated as ongoing initiatives, not one-time deployments, to ensure sustained effectiveness and adaptability to new demands.

9. Relying Too Much on AI to Judge AI

Automated AI evaluation can overlook critical errors. AI-generated translations, for example, may appear grammatically correct but misinterpret cultural nuances.

Human oversight is crucial to identifying these flaws. In cybersecurity, human analysts must validate AI-powered threat detection to ensure accuracy.

Organizations should balance AI-driven validation with manual review, combining algorithmic efficiency with expert judgment. A hybrid approach—where AI handles large-scale analysis, and humans verify complex cases—improves reliability. Relying solely on AI for evaluation risks reinforcing biases, increasing error rates, and reducing accountability.
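Before trusting an automated judge, compare its verdicts with human labels on a sample of the same items. Raw agreement can look high when one label dominates, so a chance-corrected measure such as Cohen’s kappa is more informative. The labels below are hypothetical:

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Chance-corrected agreement between two raters labeling the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    ca, cb = Counter(a), Counter(b)
    expected = sum(ca[label] * cb[label] for label in ca) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (observed - expected) / (1 - expected)


# Hypothetical spot-check: human reviewers vs. an automated judge on 8 translations.
human = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "fail"]
judge = ["pass", "pass", "pass", "pass", "fail", "pass", "pass", "pass"]

raw_agreement = sum(h == j for h, j in zip(human, judge)) / len(human)
print(f"raw agreement={raw_agreement:.0%}, kappa={cohens_kappa(human, judge):.2f}")
```

In this made-up sample the judge agrees with reviewers 75% of the time, but kappa is only about 0.38 because the judge almost always answers "pass" and misses two of the three failures the humans caught.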

10. Confusing a Bad Product with Bad AI

Not all failures stem from AI itself. A poorly designed user interface can make an AI-driven tool feel unreliable, even if the model functions correctly.

A food delivery app uses AI to estimate delivery times, but late orders stem from poor logistics rather than from the model. Blaming the AI distracts from fixing the actual issue: inefficient routing. Understanding the root cause prevents errors from being misattributed.

Organizations should conduct usability testing to differentiate AI limitations from broader product design flaws. Ensuring seamless human-AI interaction—through intuitive UI/UX design, proper error handling, and user education—improves adoption. AI should be one component of a well-integrated system, not the sole factor determining product success.

11. Starting Too Complex

Many teams attempt to build highly sophisticated generative AI models from the outset. This leads to delays, high costs, and implementation challenges.

A retailer wants to use AI for customer support and starts with an advanced deep-learning chatbot. The project becomes costly and difficult to manage. A simpler rule-based chatbot could have been a practical first step before scaling.

Instead of overengineering solutions, businesses should start with simpler, interpretable models and refine them based on real-world performance. A modular approach, beginning with rule-based AI or smaller generative models, allows for incremental improvements. Keeping early development simple means faster deployment, lower costs, and easier debugging. AI projects should evolve naturally, scaling in complexity as they demonstrate reliability and value.
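A rule-based first version can be a few lines of keyword matching with a clear handoff to a person when nothing matches. This is a hypothetical sketch; the intents, keywords, and replies are placeholders:

```python
import re

# Hypothetical intents: (name, trigger keywords, canned reply).
RULES = [
    ("order_status", {"where", "order", "track", "tracking"},
     "You can track your order under Account > Orders."),
    ("returns", {"return", "refund", "exchange"},
     "You can start a return from the Returns page."),
]
FALLBACK = ("human_handoff", "Let me connect you with a support agent.")


def answer(message: str) -> tuple[str, str]:
    """Pick the rule with the most keyword hits; escalate to a person if none match."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best, best_hits = FALLBACK, 0
    for intent, keywords, reply in RULES:
        hits = len(words & keywords)
        if hits > best_hits:
            best, best_hits = (intent, reply), hits
    return best


for msg in ["Where is my order?", "I want a refund", "Do you sell gift cards?"]:
    print(msg, "->", answer(msg)[0])
```

Logging the messages that fall through to `human_handoff` shows which questions the rules cannot cover, which is concrete evidence for or against moving to a generative model later.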

12. Inadequate Model Evaluation

Flawed evaluation methods lead to misleading AI performance insights.

An AI-based resume screening tool passed internal testing but later exhibited bias against female candidates in real-world applications.

Teams often rely solely on accuracy metrics, ignoring fairness, robustness, and real-world adaptability. Comprehensive model evaluation should incorporate adversarial testing, stress testing, and cross-validation with diverse datasets. Continuous evaluation—using real-world feedback and performance monitoring—ensures AI models remain effective beyond controlled testing environments.

Without thorough evaluation, AI solutions risk failure upon deployment, damaging business credibility and user trust.
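Evaluation that stops at overall accuracy can hide the kind of bias in the resume-screening example. A minimal check is to compare outcomes per group. The sketch below computes selection rates per group and flags any group whose rate falls below 80% of the highest group’s rate, the "four-fifths" rule of thumb from US employee-selection guidelines. The data is synthetic, and this is only one fairness measure among several:

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> selection rate per group."""
    totals, chosen = defaultdict(int), defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        chosen[group] += int(selected)
    return {g: chosen[g] / totals[g] for g in totals}


def impact_ratios(rates):
    """Each group's selection rate relative to the highest-rate group."""
    top = max(rates.values())
    return {g: (r / top if top else 1.0) for g, r in rates.items()}


def flag_groups(rates, threshold=0.8):
    """Groups below the four-fifths (80%) rule of thumb."""
    return [g for g, ratio in impact_ratios(rates).items() if ratio < threshold]


# Synthetic screening outcomes for illustration only.
decisions = ([("men", True)] * 30 + [("men", False)] * 70
             + [("women", True)] * 18 + [("women", False)] * 82)
rates = selection_rates(decisions)
print(rates, impact_ratios(rates), flag_groups(rates))
```

Running the same check on every evaluation set, and again on production decisions, catches disparities that a single accuracy number averages away.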

13. Misaligned Model Metrics

Using inappropriate performance metrics can mislead generative AI development. A chatbot optimized only for speed may generate fast but irrelevant responses that frustrate users.

For example, a generative AI tool for news summarization that was optimized solely for word-count reduction produced misleading article summaries.

Generative AI development companies must define evaluation metrics that align with business goals, such as response relevance, coherence, and user satisfaction. Balancing multiple metrics—accuracy, efficiency, and fairness—ensures AI meets practical needs. Regularly revising these metrics based on evolving requirements keeps AI performance aligned with organizational objectives.
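For the summarization case, a metric that rewards only brevity will prefer a summary that drops the facts. One alternative is to combine compression with recall of key terms. In this sketch the key-term set, weights, and texts are hypothetical, and simple token matching stands in for stronger measures such as ROUGE or human ratings:

```python
import re


def tokens(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def compression(summary: str, article: str) -> float:
    """1 - summary length / article length; higher means shorter."""
    return 1 - len(tokens(summary)) / max(len(tokens(article)), 1)


def key_term_recall(summary: str, key_terms: set[str]) -> float:
    """Share of the key terms that appear in the summary."""
    found = key_terms & set(tokens(summary))
    return len(found) / len(key_terms) if key_terms else 1.0


def summary_score(summary, article, key_terms, w_recall=0.7, w_compress=0.3):
    """Weighted blend; the weights are illustrative and should be tuned on human ratings."""
    return (w_recall * key_term_recall(summary, key_terms)
            + w_compress * compression(summary, article))


article = ("The city council voted 7 to 2 on Tuesday to approve a new budget that "
           "raises transit funding by 12 percent while cutting road maintenance.")
key_terms = {"council", "approve", "budget", "transit", "12"}  # hypothetical reference terms
terse = "Council voted."
informative = "Council votes to approve budget raising transit funding 12 percent."

for name, s in [("terse", terse), ("informative", informative)]:
    print(name, round(compression(s, article), 2), round(summary_score(s, article, key_terms), 2))
```

The terse summary compresses more (0.92 versus 0.60) but keeps only one of five key terms, so the combined score ranks the informative summary higher (about 0.88 versus 0.42).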

14. Overlooking Data Privacy

AI systems that process sensitive data are subject to privacy regulations.

A fitness app collects user health data to personalize recommendations but lacks clear consent mechanisms. Users become concerned about privacy risks and take legal action.

Any business that fails to protect user data privacy can suffer regulatory fines and severe reputational damage.

Businesses should therefore implement strict data anonymization, encryption, and access controls, and comply with laws such as GDPR and CCPA. Clear data usage policies build trust through transparency. Businesses that get stuck can seek guidance from reputable generative AI consultants.

Organizations should regularly audit data pipelines to ensure secure handling, preventing potential legal and ethical violations.
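Two basic safeguards can be applied inside the data pipeline: masking direct identifiers in free text and replacing user IDs with keyed hashes. A minimal standard-library sketch; the regular expressions are simplified assumptions that will miss many formats, and the key would come from a secrets manager rather than source code:

```python
import hashlib
import hmac
import re

# Simplified patterns (assumptions): they will miss many real-world formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Mask emails and phone-like numbers before text reaches logs or training data."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


def pseudonymize(user_id: str, key: bytes) -> str:
    """Keyed SHA-256 (HMAC) so records can be joined without exposing the raw ID."""
    return hmac.new(key, user_id.encode(), hashlib.sha256).hexdigest()


KEY = b"load-me-from-a-secrets-manager"  # hypothetical; never hard-code real keys
print(redact("Reach jane.doe@example.com or +1 555 123 4567 about my heart-rate data"))
print(pseudonymize("user-42", KEY)[:16])
```

Pseudonymized data can still count as personal data under GDPR, so this reduces exposure but does not replace consent, access controls, or encryption.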

15. Lack of Preparation for Real-Time Scaling

Many AI projects work well in development but fail under real-world demand.

An AI-powered video generator gains popularity overnight, but the system crashes due to traffic overload.

Without scalable infrastructure, AI systems suffer from slow performance, downtime, or increased costs. Businesses should anticipate scaling needs by leveraging cloud-based solutions, distributed computing, and optimized model deployment techniques. Load testing under simulated peak conditions helps identify potential bottlenecks. Planning for scalability from the outset prevents performance failures, ensuring AI solutions remain responsive and cost-efficient as demand grows.
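Alongside load testing and autoscaling, admission control keeps a sudden spike from overwhelming the model servers. A token bucket is one common technique: each request spends a token, and tokens refill at a fixed rate. A minimal single-process sketch; the rate and capacity values are hypothetical, and a multi-server deployment would need shared state or a gateway-level limiter instead:

```python
import time


class TokenBucket:
    """Admit `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should queue, shed, or return HTTP 429


# Fake clock so the example is deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=3, clock=lambda: fake_now[0])
burst = [bucket.allow() for _ in range(5)]   # 3 admitted, 2 rejected
fake_now[0] = 1.0                            # one second later: 2 tokens refilled
later = [bucket.allow() for _ in range(3)]
print(burst, later)
```

Rejecting excess requests quickly keeps latency predictable for admitted users, while the rejection count itself becomes a useful signal for when to add capacity.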

16. Using Generative AI When It’s Not Needed

Not every problem requires generative AI. Some companies invest in complex AI solutions when simpler rule-based automation would be more efficient.

A company automates simple customer FAQs using a generative AI model when a structured knowledge base would have sufficed. This results in higher costs with no added value.

Before venturing into generative AI development, businesses should evaluate whether traditional algorithms, predefined automation, or human-driven processes could achieve the same results more effectively. AI should complement existing workflows, not complicate them. Strategic AI implementation ensures resources are used wisely, maximizing efficiency without unnecessary complexity.

17. Ignoring Human Oversight in AI Decision-Making

Using AI without human intervention can cause unintended consequences.

For instance, if a content moderation AI flags harmless posts as violating community standards, the result is unnecessary removals, frustrated users, and damage to the platform’s reputation. Without human review, such errors can easily slip through.

AI is powerful but lacks human judgment, context awareness, and ethical reasoning. Because of this, organizations must embrace human-in-the-loop (HITL) systems that vet AI suggestions before they get executed. This approach balances efficiency with accuracy, preventing costly errors.

Regular audits and human validation ensure AI outputs align with business values and ethical considerations. AI should assist decision-making, not replace critical human oversight.
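A human-in-the-loop gate can be as simple as routing rules around the model’s output. In this hypothetical moderation sketch, removals and low-confidence decisions always go to a reviewer, and a small random sample of automatic decisions is also audited. The labels, threshold, and audit rate are assumptions to be tuned per platform:

```python
import random
from dataclasses import dataclass, field


@dataclass
class ModerationRouter:
    """Auto-apply only confident 'keep' decisions; send everything else to people."""
    auto_threshold: float = 0.95
    audit_rate: float = 0.02  # share of automatic decisions also spot-checked
    review_queue: list = field(default_factory=list)
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def route(self, post_id: str, label: str, confidence: float) -> str:
        # Removals hurt users when wrong, so a person confirms every one.
        if label == "remove" or confidence < self.auto_threshold:
            self.review_queue.append((post_id, label, confidence))
            return "human_review"
        if self.rng.random() < self.audit_rate:
            self.review_queue.append((post_id, label, confidence))
            return "auto_with_audit"
        return "auto"


router = ModerationRouter()
print(router.route("p1", "remove", 0.99))  # human_review
print(router.route("p2", "keep", 0.80))    # human_review
```

The audited sample doubles as an ongoing measurement of how often the automatic path is wrong, which tells the team whether the threshold can be relaxed or must be tightened.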

18. Failing to Consider AI’s Environmental Impact

Training large AI models consumes vast amounts of energy, leading to significant carbon emissions. Many companies overlook this aspect, prioritizing model performance over sustainability. AI’s environmental footprint grows as models become more complex and require extensive computational power.

A company trains a massive generative AI model without optimizing resource usage, consuming as much electricity as several hundred homes over a year. This results in high costs and environmental impact.

To reduce this footprint, use energy-efficient generative AI frameworks and architectures, optimize computational resources, and adopt green AI practices such as using renewable energy sources and minimizing unnecessary model retraining. Balancing performance with sustainability ensures responsible AI development.
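A rough estimate before training makes these trade-offs concrete: facility energy is approximately GPU count times average power per GPU times hours, multiplied by the data center’s power usage effectiveness (PUE), and emissions follow from the grid’s carbon intensity. All inputs below, including the default PUE of 1.2, are hypothetical, and the estimate ignores CPUs, networking, storage, and inference:

```python
def training_energy_kwh(num_gpus: int, gpu_power_kw: float, hours: float,
                        pue: float = 1.2) -> float:
    """GPU energy scaled by power usage effectiveness (PUE) for cooling and overhead."""
    return num_gpus * gpu_power_kw * hours * pue


def emissions_kg(energy_kwh: float, grid_kg_co2_per_kwh: float) -> float:
    return energy_kwh * grid_kg_co2_per_kwh


# Hypothetical run: 512 GPUs at 0.4 kW average draw for 30 days.
energy = training_energy_kwh(num_gpus=512, gpu_power_kw=0.4, hours=30 * 24)
for grid, intensity in [("fossil-heavy grid", 0.4), ("low-carbon grid", 0.05)]:
    print(f"{energy:,.0f} kWh on {grid}: {emissions_kg(energy, intensity):,.0f} kg CO2")
```

With these assumed inputs, the run uses about 177,000 kWh, and moving it to a grid emitting 0.05 instead of 0.4 kg of CO2 per kWh cuts estimated emissions eightfold; skipping one unnecessary retraining run saves the full amount.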


Conclusion

To avoid common pitfalls in generative AI development, organizations must stay vigilant and proactive. Success depends on clear objectives, high-quality data, and a defined strategy. Businesses should also ensure that their AI models remain up to date, explainable, and properly integrated into real-world applications.

Neglecting these elements can produce inefficient, biased, or outright dangerous results. By combining technical expertise with responsible AI governance, organizations can harness the full potential of generative AI while mitigating risks.

A well-executed AI strategy doesn’t just enhance efficiency—it builds trust, drives innovation, and ensures sustainable growth in an increasingly AI-driven world.

FAQs

How do you keep generative AI under control?

Keeping AI in check means setting strict rules, monitoring its outputs, and making sure it follows ethical guidelines. Developers use things like guardrails, moderation filters, and human oversight to stop it from going rogue. The goal? Keep it useful and safe without letting it run wild.

What are the biggest challenges facing generative AI?

Generative AI faces hurdles like high costs, data bias, and the sheer complexity of making it reliable. It also needs massive computing power, and not everyone trusts it yet. Plus, businesses worry about security, accuracy, and whether AI-generated content will actually help or just add more noise.

What legal issues does generative AI raise?

Copyright is a big one: who owns AI-generated content? Then there’s misinformation, defamation, and deepfakes, all of which can cause legal headaches. Privacy laws also come into play, especially if AI is trained on personal data. Governments and courts are still figuring out the rules.

Why does AI struggle to generate realistic hands?

Hands are tricky because they’re complex: tons of joints, different positions, and weird angles. AI struggles because training data often doesn’t have enough high-quality hand images. Plus, minor errors get magnified, leading to extra fingers or weirdly bent thumbs. It’s getting better, but it’s still not perfect.

What are the main privacy concerns with generative AI?

The biggest concern is AI learning from personal data without consent. If it scrapes info from the web, it might spit out private details. Plus, companies using AI need to protect user data from leaks or misuse. The challenge is balancing innovation with respecting people’s privacy.
